Learn, Build, Scale: A Revenue Leader’s AI Transformation Roadmap

AI x GTM Summit → Session #6 with Kyle Norton


Thanks to the companies that made the AI x GTM Summit possible! (+stats/notable attendees)


The Future-Ready Revenue Leader

We closed out the AI x GTM Summit with Kyle Norton, CRO at Owner.com.

Kyle is the most AI-pilled CRO in SaaS (that I know of). And he, and Owner, are crushing it. A lot of that success traces back to their aggressive early adoption of AI in their go-to-market machine. Plus, he somehow has the time to share what he’s learning with other revenue leaders on the Revenue Leadership Podcast (👈 check it out). He’s the GOAT.

And Kyle didn’t disappoint in his session. He gave us frameworks for thinking about AI transformation, what “AI-native” actually means for leaders, and how to prioritize use cases without wasting time on vaporware.

Here’s what he covered:

The AI reality (this is about survival, not the future)

What “AI-native” actually means (and what it doesn’t)

The new revenue leadership skill stack

AI transformation roadmap (data → capabilities → use cases)

The 5P prioritization framework

Adoption principles that actually work

Bonus: Generative + Deterministic

How much time does Kyle personally spend on AI?

Follow Kyle on LinkedIn!

[Suggested Spotify playlist while reading this series]

ICYMI:

Session #1 → Sorting the World into your CRM (GTM gold is stuck in your top seller’s brain: How to extract + operationalize it) | YouTube video of Matthew and Andreas’ full session (22 mins)

Session #2 → Infrastructure for agentic GTM: data stack, orchestration, and activation | YouTube video of Nico’s full session (23 mins)

Session #3 → Getting agents deployed into your GTM systems: pitfalls, opportunities, and best practices | YouTube video of Joe’s full session (19 mins)

Session #4 → Nuanced Viewpoint on AI-Generated Outbound Email Copy | YouTube video of full session (22 mins)

Session #5 → Automating Signals that Work in Your Specific Market | YouTube video of full session (23 mins)

Alright, let’s get into it.

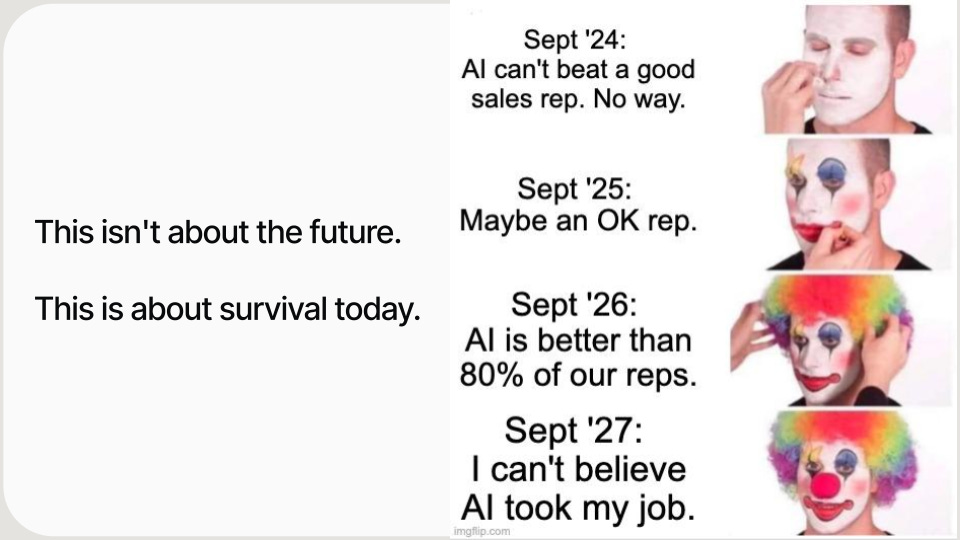

The AI reality (this is about survival, not the future)

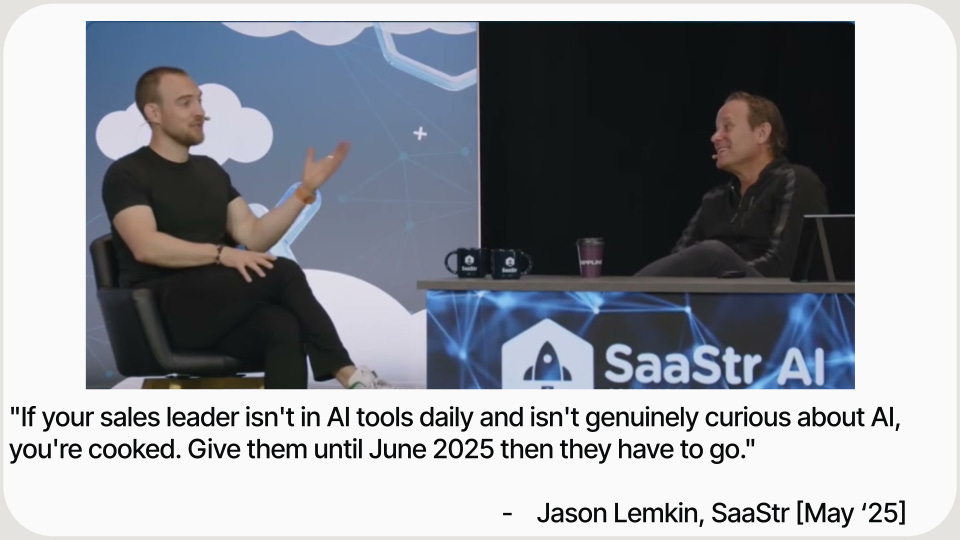

Kyle opened with a quote from Jason Lemkin at SaaStr:

That was from May 2025. The bar has only gone up since then.

Kyle’s perspective: maybe you’re not cooked in 2025. But in 2026? We’re going to see a great bifurcation in go-to-market outcomes. Companies using AI heavily to create leverage versus companies that don’t.

This isn’t theoretical anymore. Owner’s sales team is 3-4x more productive on a per-rep basis than their competitors. Kyle said his CFO thought he was doing the math wrong when they looked at OTEs to quota and outcomes.

Because they’ve stripped so much tedious, monotonous work from reps and used AI to make them more productive, Owner can reinvest those savings elsewhere. They can outspend competitors on brand, engineering, and growth. For instance, they hired Alex Hormozi’s head of media to run media for Owner (a hire you can only make if you’re not spending wildly on sales and marketing). Wild.

That advantage compounds. And it started with early AI adoption. Owner has nine high-impact AI use cases in production. Their first one went live in December 2022.

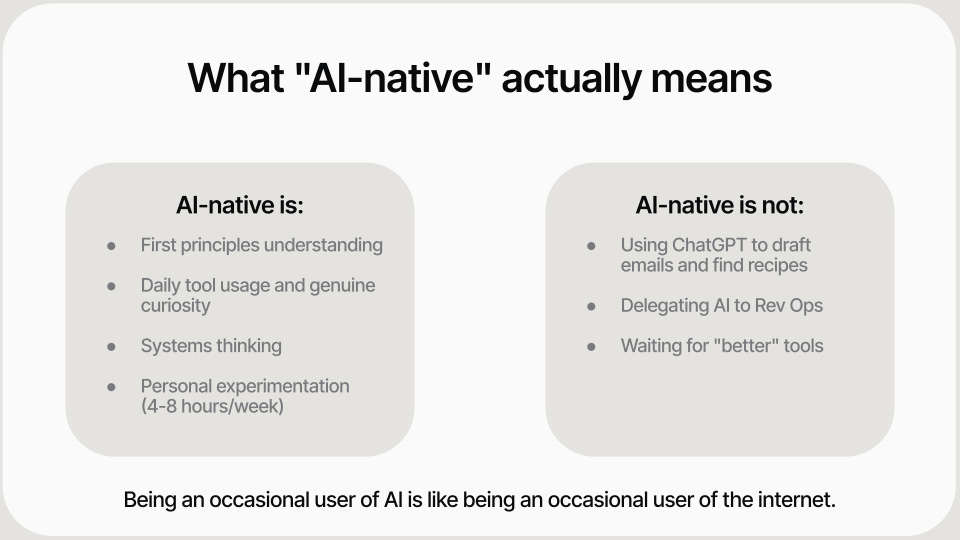

What “AI-native” actually means (and what it doesn’t)

Most people think “AI-native” means they use ChatGPT for answers and Salesforce formulas. That’s not the bar anymore.

You, the CRO or CMO, need to evaluate tools, pilot one, pick one, figure out the evals and context engineering, and get it into the world.

AI-native is:

First principles understanding (you know why models hallucinate, the difference between pre-training and post-training)

Daily tool usage and genuine curiosity

Systems thinking

Personal experimentation (4-8 hours per week minimum)

AI-native is NOT:

Using ChatGPT to draft emails and find recipes

Delegating AI to Rev Ops

Waiting for “better” tools

Being an occasional user of AI is like being an occasional user of the internet.

For a lot of Owner’s early implementations, Kyle was hands-on keyboard with his RevOps and data teams. He’s vibe-coded his own apps (none of which worked that well in production fwiw, he admitted). But going through the process—watching YouTube videos, building things, breaking things—is what builds fluency and intuition.

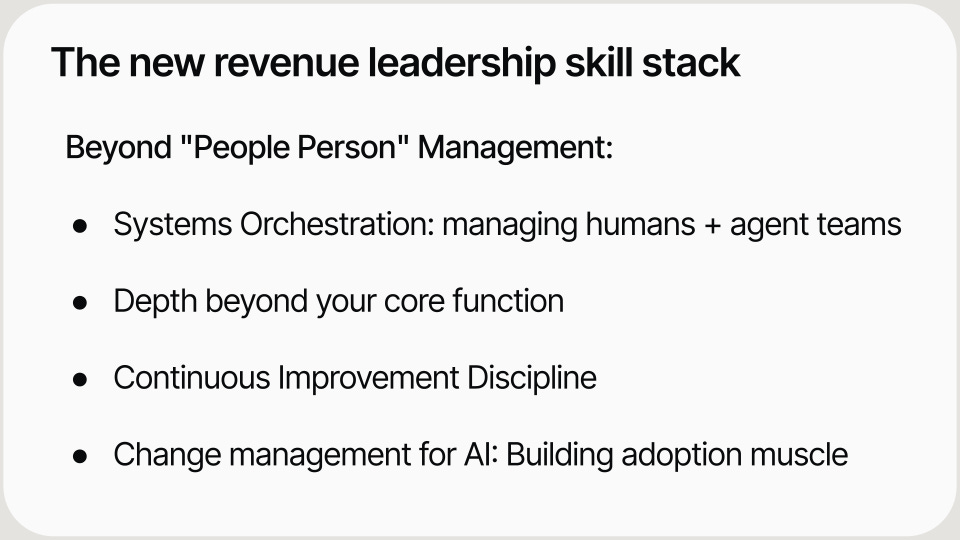

The new revenue leadership skill stack

The “people person” manager archetype (the leader who knows how to hire, train, and coach reps, and bring home the number) is going away.

Not everywhere at once. Enterprise will be slower. Markets without AI-native competitors will lag. But if you’re competing against a systems-engineering, AI-native thinker, you’ll be losing and won’t realize why until it’s too late.

Kyle laid out the new skill stack for revenue leaders:

1. Systems orchestration. You’re managing humans and agent teams now. You have to think about how all these things coordinate together.

2. Depth beyond your core function. If you’re a sales leader, you can’t just think about sales. You need to understand implications into onboarding, growth, CS. Agents might work across these functions. The lines are blurring.

3. Continuous improvement discipline. Be extremely analytical. Understand the math. Know how to improve the revenue system through data and experimentation.

4. Change management for AI. This is one that Kyle only recently added to the list. The companies that can change the fastest are going to win. Driving adoption is as important as the technical implementation.

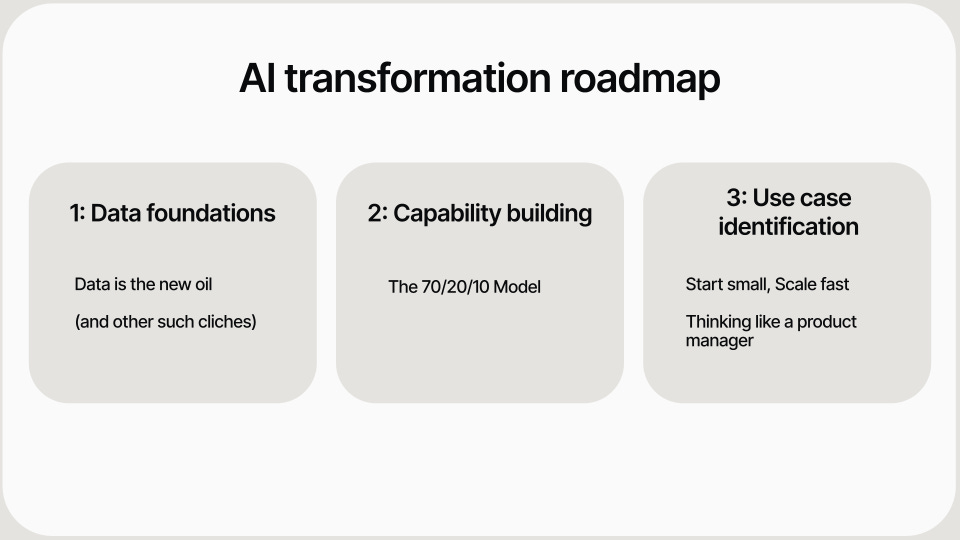

AI transformation roadmap (data → capabilities → use cases)

Kyle laid out three phases:

Phase 1: Data foundations

Nothing in AI works without good data. This is the “do not pass go, do not collect $200” moment.

Third-party data:

Market, account, person, contact, intent, signal data

Proprietary 3P data (stuff you can scrape and enrich that competitors don’t have) is a competitive moat

Most of your team is wasting most of their time because they don’t have the right data about the expected value of the next best action

First-party data:

What’s happening in your customer journey?

Data from your product, users, and team

Unify, clean, structure

High-impact first tool: AI CRM enrichment (Kyle uses Momentum)

Documentation:

AI loves written docs

More people are writing things specifically to be used by LLMs

Your knowledge base becomes your AI foundation

Phase 2: Capability building

Kyle’s 70/20/10 model:

70%: Level up leadership. You and your team need to become AI-fluent. Build AI literacy into leadership development. Do the work.

20%: Hire expertise. A GTM AI leader with a track record of building (not just talking). Usually sits in Rev Ops.

10%: Strategic consulting. Time-bound projects for specialized expertise. But don’t outsource the transformation.

What doesn’t work:

Hiring consultants to “lead transformation”

Expecting your current team to figure it out alone

Traditional training programs

What works:

Leading transformation from within (*note: I’ve seen this first-hand; ideally, it needs to come from the CEO/founders/execs)

Safe-to-fail experimentation environments

Learning culture, not training programs

Kyle suggested finding answers through your network, events like the AI x GTM Summit (kind of him!), Twitter/X, YouTube, and, to a lesser extent, LinkedIn.

Phase 3: Use case identification

Kyle called out the main failure mode: people pick AI projects at random, based on which vendor calls them or what looks cool on LinkedIn. Projects peter out. Teams get fatigued. Then, when you want to implement the next thing, nobody believes you.

You need a prioritization framework.

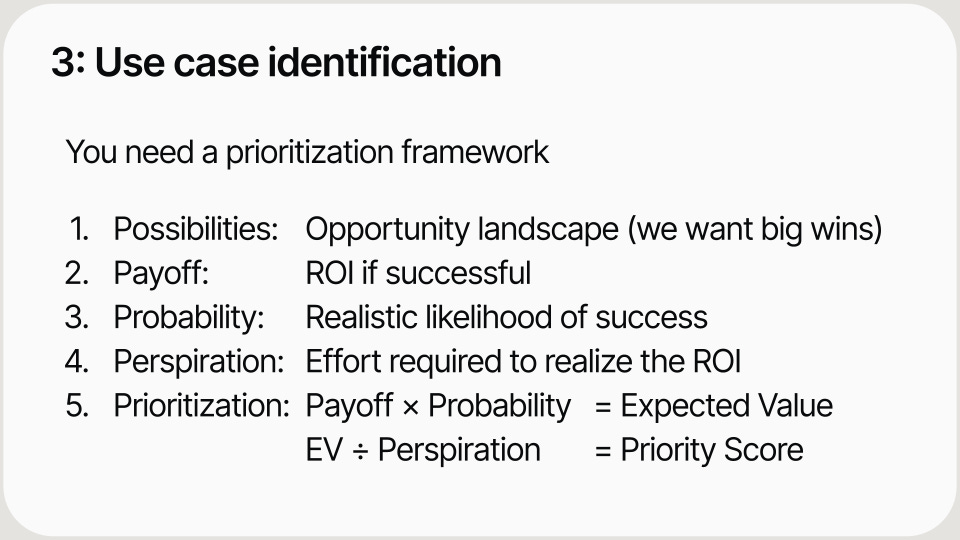

The 5P prioritization framework

Kyle adapted this from Annie Duke’s work (Thinking in Bets, How to Decide):

1. Possibilities. What’s your opportunity landscape? Map all the ways AI could improve your biggest priorities.

2. Payoff. If that thing works, what’s the ROI? You want big wins, not 5% improvements on small parts of the team.

3. Probability. What’s the realistic likelihood of success? How speculative is this? Some AI bets are well-proven now. Vet vendors by asking for references.

4. Perspiration. How much effort is required? Does it need product time, rev ops work, and marketing to completely change how they operate? That’s probably not set up for success.

5. Prioritization. Expected Value ÷ Perspiration = Priority Score

Example: If the payoff is $10M in pipeline and $1M in closed-won, and the probability is 50%, the expected value is $500K ($1M closed-won × 50%). Now divide by the effort required.

Down-weight stuff that has big potential payoff but insane effort. Find things with similar expected value but much less organizational lift.
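To make the arithmetic concrete, here is a minimal Python sketch of the Expected Value ÷ Perspiration calculation. It is an illustration, not Kyle's actual tooling: the `UseCase` class, the 1-10 effort scale, the effort values, and the second candidate ("Cross-functional rebuild") are all assumptions. Only the $1M closed-won payoff and 50% probability come from the example above.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    payoff: float        # estimated closed-won dollars if the bet works
    probability: float   # realistic likelihood of success, 0.0 to 1.0
    perspiration: float  # relative effort; hypothetical 1-10 scale

    @property
    def expected_value(self) -> float:
        # Expected Value = Payoff x Probability
        return self.payoff * self.probability

    @property
    def priority_score(self) -> float:
        # Priority Score = Expected Value / Perspiration
        return self.expected_value / self.perspiration


def rank(use_cases):
    """Return use cases sorted from highest to lowest priority score."""
    return sorted(use_cases, key=lambda u: u.priority_score, reverse=True)


# Effort values and the second candidate are made up for illustration.
candidates = [
    UseCase("CRM enrichment after calls", payoff=1_000_000, probability=0.5, perspiration=2),
    UseCase("Cross-functional rebuild", payoff=3_000_000, probability=0.5, perspiration=10),
]

for uc in rank(candidates):
    print(f"{uc.name}: EV=${uc.expected_value:,.0f}, score={uc.priority_score:,.0f}")
```

In this made-up comparison, the bigger bet has three times the expected value but five times the effort, so it scores lower. The score is only as good as the effort estimate, so it is worth agreeing on one effort scale before comparing projects.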

Easy wins Kyle recommends:

Automating CRM enrichment after calls

Support automation

Inbound AI SDR

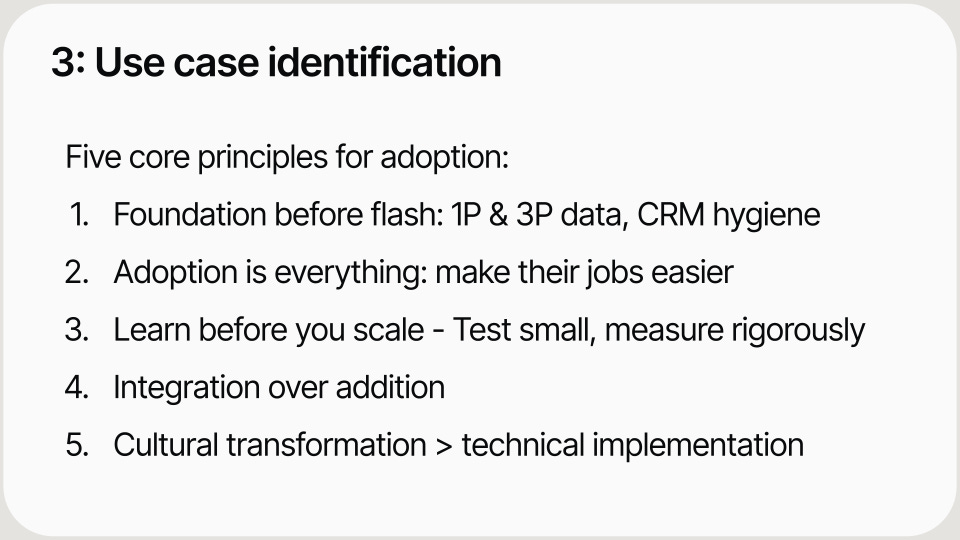

Adoption principles that actually work

Kyle’s five core principles:

1. Foundation before flash. Fix your 3P and 1P data. Ace CRM hygiene. Don’t worry about avatar-based sales or AI solution engineers until the foundations are solid.

2. Adoption is everything. Nothing matters unless reps actually use these tools. Early investments should make their jobs easier. If the language makes them feel like you’re trying to replace them, nothing will get traction.

3. Learn before you scale. Force vendors to do pilots. Get rigorous with references. Test in small groups of reps or small batches of data. Have a testing infrastructure. Evals should be on point.

4. Integration over addition. Don’t add new surfaces. If you add one, consider taking away one. Owner pushes most AI outputs to reps in Salesforce. Salesforce is their surface. Pre-call research happens elsewhere, but reps don’t have to click through to another tool.

5. Cultural transformation > technical implementation. Spend at least as much time on driving adoption as on technical components. Spotlight what works. Slow-roll to the organization. Don’t deploy through a Slack announcement and wonder why nobody uses your cool AI stuff.

Bonus: Generative + Deterministic

Kyle added a bonus tip: Many AI implementations fail because they’re stacking generative layers on top of one another. That’s how you get slop.

You need deterministic layers to provide structure. If a big part of a vendor’s value prop is how little work it requires (“We figure out your positioning, we identify your market, we pick prospects, we generate messaging, we send them...”), be skeptical. That’s a lot of generative steps with no human input or deterministic structure.
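One way to picture the idea is a single generative step sandwiched between deterministic ones. The Python sketch below is a hypothetical illustration, not how Owner or any vendor does it: the segment rules, the banned phrases, the account fields, and the `generate_draft` stub (which stands in for an LLM call) are all assumptions.

```python
import re

# Hypothetical rules; a real team would derive these from its own data.
ALLOWED_SEGMENTS = {"restaurant", "cafe"}
MAX_WORDS = 120
BANNED_PHRASES = ("guarantee", "i hope this email finds you well")


def select_prospects(accounts):
    """Deterministic layer: rule-based filtering, no model involved."""
    return [
        a for a in accounts
        if a["segment"] in ALLOWED_SEGMENTS and not a["has_online_ordering"]
    ]


def generate_draft(account):
    """Stand-in for the single generative step (an LLM call in practice)."""
    return (
        f"Hi {account['contact']}, noticed {account['name']} "
        "doesn't take online orders yet. Worth a quick chat?"
    )


def validate_draft(draft, account):
    """Deterministic layer: hard checks the generated text must pass."""
    problems = []
    if len(draft.split()) > MAX_WORDS:
        problems.append("too long")
    if account["name"] not in draft:
        problems.append("missing account name")
    lowered = draft.lower()
    problems.extend(f"banned phrase: {p}" for p in BANNED_PHRASES if p in lowered)
    if re.search(r"\{.*?\}|\[.*?\]", draft):
        problems.append("unfilled template placeholder")
    return problems


def run(accounts):
    """Filter by rules, generate once, then validate by rules."""
    approved, rejected = [], []
    for account in select_prospects(accounts):
        draft = generate_draft(account)
        problems = validate_draft(draft, account)
        (rejected if problems else approved).append((account["name"], draft, problems))
    return approved, rejected


# Made-up sample accounts for illustration only.
accounts = [
    {"name": "Luigi's", "contact": "Sam", "segment": "restaurant", "has_online_ordering": False},
    {"name": "Acme Bank", "contact": "Lee", "segment": "finance", "has_online_ordering": False},
]
approved, rejected = run(accounts)
print(approved)
print(rejected)
```

The model never decides who gets contacted, and nothing it writes goes out without passing fixed checks. Rejected drafts can then go to a human for review instead of into another generative step.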

How much time does Kyle personally spend on AI?

Kyle personally spends 10+ hours learning and tinkering with AI each week.

Podcasts, Twitter, YouTube, playing with tools himself. He gave an example: Gemini 3 came out, and even though all his stuff is in ChatGPT with tons of memory and projects, he spent two hours that night running the same prompts in both tools, comparing outputs, testing atomic reasoning vs. chain of thought.

His wife is watching TV. He’s got one AirPod in, exploring.

“It’s hard to even say how much time, because my life… every part of my day… is informed by AI now. Ten hours is just the exploration and learning stuff. Not an hour goes by that I’m not in one of these tools.”

And even with that, he feels behind. Which made me feel better, because I constantly feel behind!

The Bottom Line

Kyle’s session was the perfect closer for the summit. It zoomed out from tactics to strategy—what it actually means to lead a revenue org in the AI era as a CRO of a fast-growing SaaS company.

The framework:

Get AI-native yourself. Daily tool usage. First principles understanding. Build something with your own hands.

Fix data foundations first. 3P and 1P data. Documentation. CRM hygiene. Don’t pass go until this is done.

Build capability internally. 70% leveling up your team, 20% hiring expertise, 10% strategic consulting. Don’t outsource the transformation.

Prioritize ruthlessly. Map possibilities, estimate payoff and probability, calculate expected value, divide by effort.

Drive adoption like your job depends on it. Because it does. Cultural transformation matters as much as technical implementation.

The companies that figure this out will be 3-4x more productive than their competitors. That advantage compounds.

This isn’t about the future. This is about survival today.

Watch the full session on YouTube (28 minutes):

Grateful for Kyle closing out the summit. If you want more from him, subscribe to the Revenue Leadership Podcast—it’s excellent.

Follow Kyle Norton on LinkedIn.

→ Kyle is CRO at Owner.com, host of the Revenue Leadership Podcast, and a SaaS GTM advisor and investor.

That’s a wrap on the AI x GTM Summit session recaps!

Thank you to all 645 of you who registered, attended live, asked questions, and engaged. Thank you to all six speakers for sharing so generously. And please go check out the awesome sponsors that made this event possible: Rox, Attention, Clarify, Sumble, Instantly.

Excited to publish more (deeply researched, opinionated) content in the coming months.

Thank you for your attention and trust,

Brendan 🫡

"Nothing in AI works without good data." Amen, but don't let a lack of data push you into 'action paralysis' either! Good data beats lots of data that you'll never get to.

Enjoyed the sessions and these recaps - when's the next one?

Kyle's 70/20/10 model is one of the most honest things I've seen from a revenue leader on AI transformation. Most executives outsource the entire thing and wonder why nothing changes. The fact that he's spending 10+ hours a week personally tells his team something no memo ever could: this is real.

But what caught my attention was something he only recently added to his skill stack — change management for AI. And his fifth adoption principle: "Cultural transformation > technical implementation."

That's the part most organizations skip. Not because they disagree with it, but because they don't know how to execute it. They can map use cases, prioritize by expected value, fix CRM hygiene, all of that is structured and measurable. But when adoption stalls, the answer is usually upstream of the technology.

Kyle names it without naming it: "If the language makes them feel like you're trying to replace them, nothing will get traction." That's not a communication problem. That's an identity problem. And identity problems don't show up in a 5P prioritization framework.

The companies getting 3-4x productivity aren't just better at picking use cases. They've figured out the human layer, whether people feel safe experimenting, whether leadership has defined what "good" looks like at each role, whether the workflow actually changed or just got faster.

Owner.com clearly figured that out. The question for everyone else is: do you know which part of the human layer is actually blocking you? Because the answer is different for every organization, and most are guessing.