Infrastructure for agentic GTM: data stack, orchestration, and activation

AI x GTM Summit → Session #2 with Nico Druelle

If you were forwarded this newsletter, join 6,002 weekly readers—some of the smartest GTM founders and operators—by subscribing here:

Thanks to the companies that made the AI x GTM Summit possible! (+attendee stats)


RevTech Lessons From The Trenches with Nico Druelle

Everyone’s talking about AI agents. But not many people are talking about what makes them actually work.

The unsexy answer: infrastructure. Data unification. Real-time enrichment. Orchestration logic. The plumbing underneath the shiny demos.

Without it, you’re running automation on top of a broken foundation. Your agents inherit your data problems—and execute them at scale.

In Session #2 at the AI x GTM Summit, Nico Druelle laid out what he’s seen working inside companies like Linear, Descript, Canva, and others. Nico runs The Revenue Architects and has spent the last year deep in the infrastructure that powers modern GTM motions: building ABM programs, real-time enrichment systems, and the orchestration layers that make agentic workflows possible.

His session was a masterclass in what it actually takes to build this stuff: not theory, but hard-earned lessons from the trenches.

Here’s what he covered:

Doing ABM right (1st party data → custom signals → curate accounts)

Real-Time Enrichment (Canva’s PQL example)

Most teams still ignore the foundational gaps (3 pillars)

The prize: a post-CRM era (before/after comparison)

3-layered stack (unified data → orchestration → rep interface)

Building the right team (where to hire, reward, place)

Go follow Nico on LinkedIn!

Alright, let’s get into it.

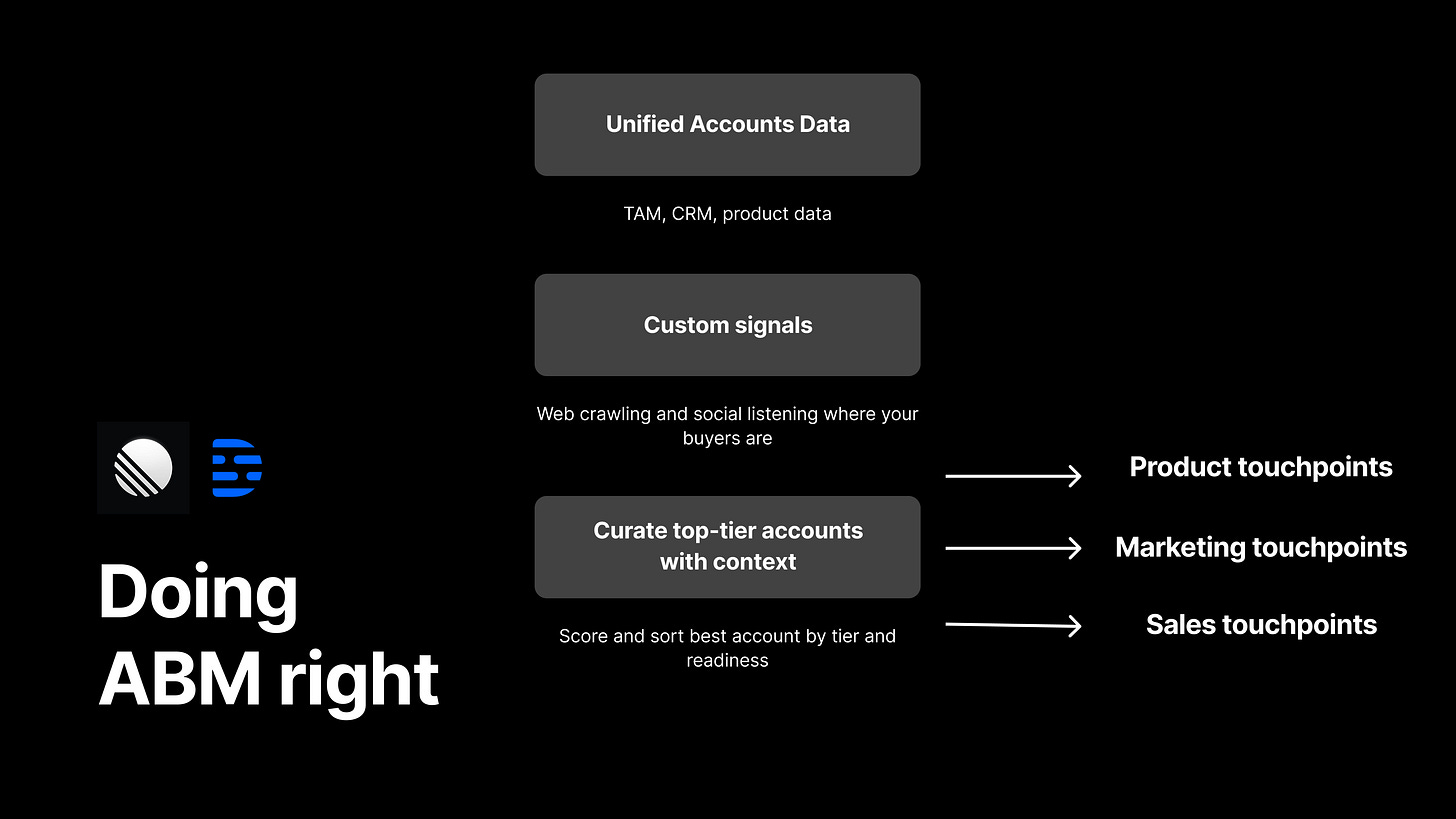

Doing ABM right (1st party data → custom signals → curate accounts)

Nico started with two companies that have cracked ABM: Linear and Descript.

Both are successful PLG companies. Both brought Nico in to build product-led sales motions. And both went straight into ABM—not as a marketing program, but as a company strategy.

Why ABM? Because single-channel GTM doesn’t work anymore.

Cold outbound is saturated. Paid is getting diluted. You need a coordinated mix of channels working together. That’s what ABM does well (when done right).

Nico walked through a three-step process:

Step 1: Data unification.

Build an internal “ABM brain.” Stitch together data from CRM, warehouse, LinkedIn paid ads, product usage—everything. Aggregate at the domain level. Then fetch additional domains in your TAM that haven’t been exposed to your sales motion or product yet.

The result: a unified representation of your entire addressable market.
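The domain-level aggregation in Step 1 can be sketched in a few lines of Python. This is a minimal illustration, not Nico's implementation: the source names (`crm`, `product`, `ads`), the record shape, and the helpers `normalize_domain` and `unify_by_domain` are hypothetical, and a real "ABM brain" would live in a warehouse rather than in memory.

```python
from urllib.parse import urlparse


def normalize_domain(value: str) -> str:
    """Reduce an email address, URL, or bare domain to a lowercase key like 'acme.com'."""
    value = value.strip().lower()
    if "://" in value:
        value = urlparse(value).netloc
    elif "@" in value:
        value = value.split("@", 1)[1]
    if value.startswith("www."):
        value = value[len("www."):]
    return value


def unify_by_domain(sources: dict[str, list[dict]], tam_domains: list[str]) -> dict[str, dict[str, list[dict]]]:
    """Merge records from every source into one profile per domain.

    Hypothetical input shape: each record carries an email, URL, or domain under "domain".
    Output: {domain: {source_name: [records without the "domain" key]}}.
    """
    accounts: dict[str, dict[str, list[dict]]] = {}
    for source_name, records in sources.items():
        for record in records:
            domain = normalize_domain(record["domain"])
            fields = {k: v for k, v in record.items() if k != "domain"}
            accounts.setdefault(domain, {}).setdefault(source_name, []).append(fields)
    # Add TAM domains that no channel or product touchpoint has reached yet (empty profile).
    for domain in tam_domains:
        accounts.setdefault(normalize_domain(domain), {})
    return accounts


sources = {
    "crm": [{"domain": "jane@Acme.com", "stage": "open"}],
    "product": [{"domain": "https://www.acme.com", "weekly_active": 12}],
    "ads": [{"domain": "globex.io", "clicks": 3}],
}
unified = unify_by_domain(sources, tam_domains=["initech.com", "acme.com"])
print(sorted(unified))  # ['acme.com', 'globex.io', 'initech.com']
```

Keying everything on a normalized domain is what lets a CRM email, a product URL, and an ad click land on the same account; the empty `initech.com` profile is the "not yet exposed" part of the TAM.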

Step 2: Custom signals and curation.

Layer 20-30 custom data points per account. Not generic firmographics. Stuff that actually matters for your ICP.

Nico gave examples:

Descript: Number of YouTube videos published in the last 30 days. (If you’re selling video editing software, this is gold.)

Linear: Engineering headcount as a percentage of total company headcount. (Engineering-heavy orgs are their sweet spot.)

Run these signals through an ICP score. Rank all accounts. Then curate the top tier—start small, maybe 500 accounts—with alignment across product, marketing, and sales.
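A weighted, capped sum is one simple way to turn custom signals into an ICP score and curate a top tier. The sketch below assumes that approach; the signal names (`eng_headcount_pct` borrows the Linear example, the other two are invented), the weights, and the caps are all hypothetical placeholders, not values from the session.

```python
from dataclasses import dataclass


@dataclass
class Account:
    domain: str
    signals: dict[str, float]  # raw custom data points for this account


# Hypothetical signals, weights, and caps. Real ones would come from your own ICP and win/loss analysis.
WEIGHTS = {"eng_headcount_pct": 0.5, "weekly_active_users": 0.3, "eng_hires_90d": 0.2}
CAPS = {"eng_headcount_pct": 0.6, "weekly_active_users": 50.0, "eng_hires_90d": 10.0}


def icp_score(account: Account) -> float:
    """Weighted sum of signals, each scaled to [0, 1] by its cap; missing signals count as 0."""
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = account.signals.get(name, 0.0)
        total += weight * min(max(value / CAPS[name], 0.0), 1.0)
    return round(100 * total, 1)


def curate(accounts: list[Account], top_n: int = 500) -> list[Account]:
    """Rank every account by ICP score (highest first) and keep the top tier."""
    return sorted(accounts, key=icp_score, reverse=True)[:top_n]


accounts = [
    Account("b.com", {"eng_headcount_pct": 0.3}),
    Account("c.com", {}),
    Account("a.com", {"eng_headcount_pct": 0.6, "weekly_active_users": 50, "eng_hires_90d": 10}),
]
print([(a.domain, icp_score(a)) for a in curate(accounts, top_n=2)])
# [('a.com', 100.0), ('b.com', 25.0)]
```

Capping each signal keeps one extreme value (say, an account with thousands of users) from swamping the others; the `top_n=500` default mirrors the "start small, maybe 500 accounts" guidance.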

Step 3: Orchestration.

This is where most teams fall apart.

Orchestration means being crisp on the rules of engagement. Who owns what. What touchpoints happen through which channels. Human outreach or agent? Making sure you’re not crossfiring (e.g., three different teams hitting the same account with conflicting messages on the same day).

To orchestrate well, you need visibility: Where is this person in the paid funnel? Where are they in the product? Where are they in the outbound sequence? Where are they in the CRM?

That requires the unified data layer from Step 1. Without it, you’re guessing.
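Rules of engagement can be encoded as a check that every proposed touch must pass before it goes out. This is a minimal sketch under assumed rules (one owning team per account, a cooldown between direct touches, always-on channels like paid ads exempt); the class names, channel names, and the three-day cooldown are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Touch:
    account: str   # account domain
    team: str      # e.g. "sdr", "marketing", "agent"
    channel: str   # e.g. "email", "linkedin_ads", "in_app"
    at: datetime


@dataclass
class RulesOfEngagement:
    """Hypothetical rules: one owning team per account, plus a cooldown between direct touches."""
    owners: dict[str, str]                                      # account domain -> owning team
    cooldown: timedelta = timedelta(days=3)
    ambient_channels: frozenset = frozenset({"linkedin_ads"})  # always-on channels, exempt from both rules
    history: list[Touch] = field(default_factory=list)

    def allow(self, touch: Touch) -> tuple[bool, str]:
        if touch.channel in self.ambient_channels:
            return True, "ambient channel"
        owner = self.owners.get(touch.account)
        if owner is not None and owner != touch.team:
            return False, f"account owned by {owner}"
        for past in self.history:
            if (past.account == touch.account
                    and past.channel not in self.ambient_channels
                    and abs(touch.at - past.at) < self.cooldown):
                return False, f"{past.team} already touched it on {past.at:%Y-%m-%d}"
        return True, "ok"

    def record(self, touch: Touch) -> tuple[bool, str]:
        """Check the rules and, if the touch is allowed, log it so later checks see it."""
        allowed, reason = self.allow(touch)
        if allowed:
            self.history.append(touch)
        return allowed, reason


rules = RulesOfEngagement(owners={"acme.com": "sdr"})
t0 = datetime(2024, 5, 1, 9, 0)
print(rules.record(Touch("acme.com", "sdr", "email", t0)))                             # (True, 'ok')
print(rules.record(Touch("acme.com", "marketing", "email", t0 + timedelta(hours=2))))  # (False, 'account owned by sdr')
print(rules.record(Touch("acme.com", "sdr", "email", t0 + timedelta(days=1))))         # (False, 'sdr already touched it on 2024-05-01')
```

The check only works if `history` sees touches from every team and tool, which is exactly why this step depends on the unified data layer.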

The takeaway: ABM isn’t a campaign. It’s a system. Alignment + focus → clarity → momentum → lower CAC, better win rates.

Nico’s second example showed what’s possible when you take this data-first approach even further.

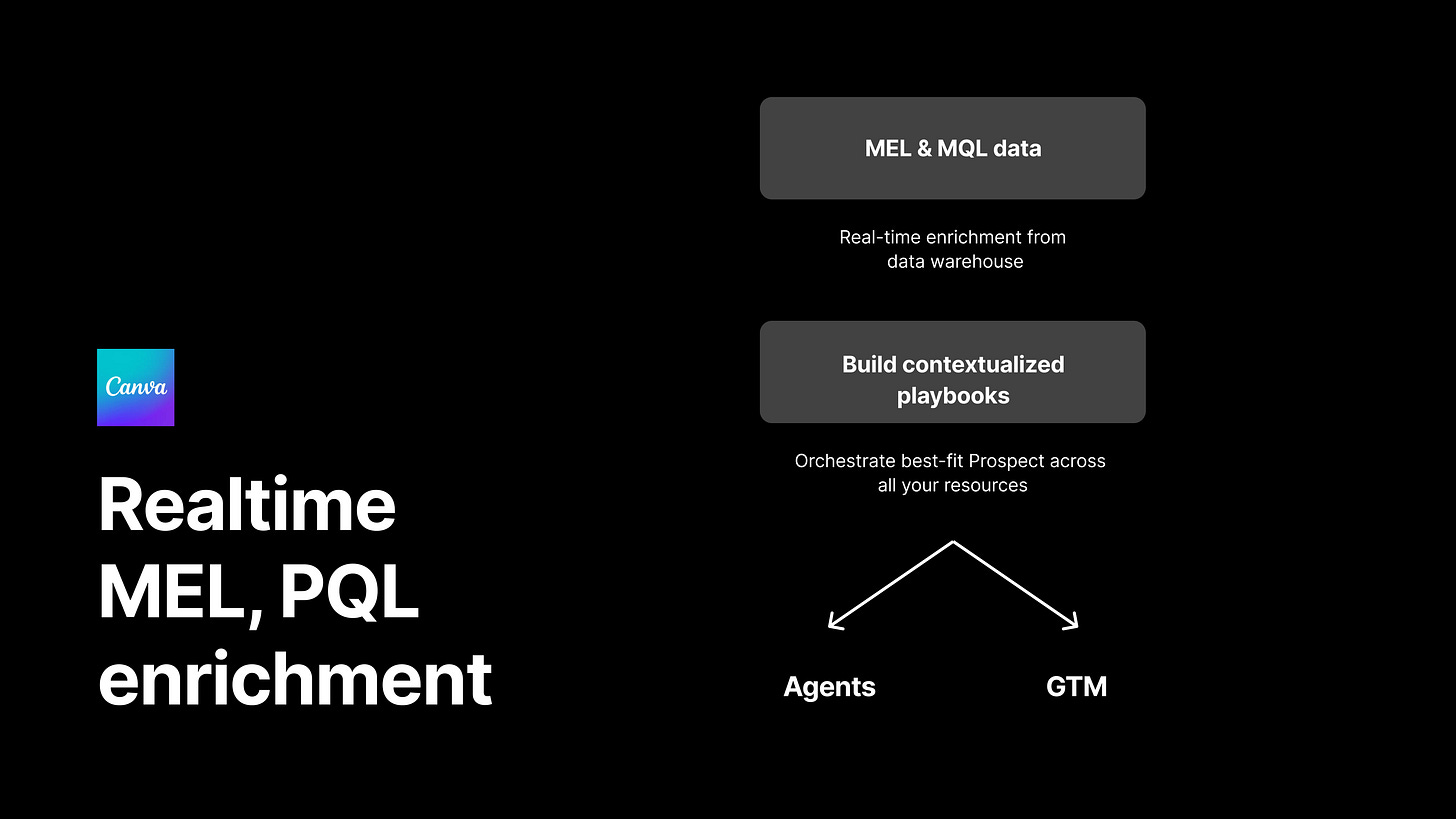

Real-Time Enrichment (Canva’s PQL example)

Nico spent Q2 and Q3 at Canva building something powerful: real-time enrichment on top of their data warehouse.

The use case: any email collected through the product or any marketing asset triggers immediate real-time enrichment. Not batch processing overnight.

That enriched record includes a fully contextualized view of the user: who they are, what company they’re at, what signals suggest about their intent and fit (ICP score), product usage patterns, marketing engagement history, and more. All of it is computed the moment the lead enters the system.

From there, the system makes a routing decision: Should this go into an agent flow? Or into a human-driven GTM play?

Here’s what that looks like in practice:

A free user signs up. Within seconds, the system enriches their profile and scores them. High-fit account, senior title, already activated core features? Route to an agent that triggers a personalized onboarding sequence and alerts an AE. Low-fit account, junior title, casual usage? Route to a nurture flow—no human touch needed.

The key: this decision happens instantly, based on complete context. Not hours later when a batch job runs. Not days later when an SDR manually reviews the lead. Right now.
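The enrich-then-route flow described above can be sketched as an event handler that runs per email capture instead of in a nightly batch. This is an illustration, not Canva's system: `lookup` is a hypothetical stand-in for warehouse queries and enrichment vendors, and the route names, seniority words, and thresholds (70, 40, two core features) are assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EnrichedLead:
    email: str
    domain: str
    title: str
    icp_score: float          # 0-100 fit score from the account model
    core_features_used: int   # activation signal from product usage


# Hypothetical stand-in for warehouse queries plus enrichment vendors, called at event time.
Lookup = Callable[[str, str], dict]

SENIOR_WORDS = {"vp", "head", "director", "chief", "founder", "cto", "ceo"}


def enrich(email: str, lookup: Lookup) -> EnrichedLead:
    domain = email.split("@", 1)[1].lower()
    data = lookup(email, domain)
    return EnrichedLead(email, domain, data["title"], data["icp_score"], data["core_features_used"])


def route(lead: EnrichedLead) -> str:
    """Hypothetical thresholds: agent flow for high fit, nurture for low fit, a human play otherwise."""
    words = set(lead.title.lower().replace("-", " ").split())
    senior = bool(words & SENIOR_WORDS)
    if lead.icp_score >= 70 and senior and lead.core_features_used >= 2:
        return "agent_onboarding_and_ae_alert"
    if lead.icp_score < 40 and not senior:
        return "nurture"
    return "human_play"


def on_email_captured(email: str, lookup: Lookup) -> tuple[EnrichedLead, str]:
    """Runs per event (signup, form fill), not in an overnight batch."""
    lead = enrich(email, lookup)
    return lead, route(lead)


fake_profiles = {
    "jane@acme.com": {"title": "VP of Engineering", "icp_score": 88, "core_features_used": 3},
    "sam@tiny.io": {"title": "Marketing Intern", "icp_score": 22, "core_features_used": 0},
}
for email in fake_profiles:
    print(email, on_email_captured(email, lambda e, d: fake_profiles[e])[1])
```

With the sample profiles, Jane routes to the agent onboarding flow plus an AE alert and Sam routes to nurture; anything in between falls through to a human-driven play.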

This is the infrastructure that makes agentic GTM possible. Without real-time enrichment, you’re always working with stale data. Your agents are making decisions based on yesterday’s picture. Your reps are reaching out to people who’ve already churned or converted.

The companies investing in this layer now are going to have a serious advantage because their systems can act with the same context a human would have, but at machine speed.

That’s what good looks like. But from what I’ve seen, most teams aren’t there yet.

And in the AI era, the cost of not fixing this has gone up dramatically. Data quality has always mattered. But when humans were the only ones acting on data, bad data meant inefficient reps. Now, with agents and automation, bad data means bad decisions executed at scale—faster than anyone can catch them. Mistakes compound with AI.

The gap between teams who have this infrastructure and teams who don’t is about to widen. Exponentially.

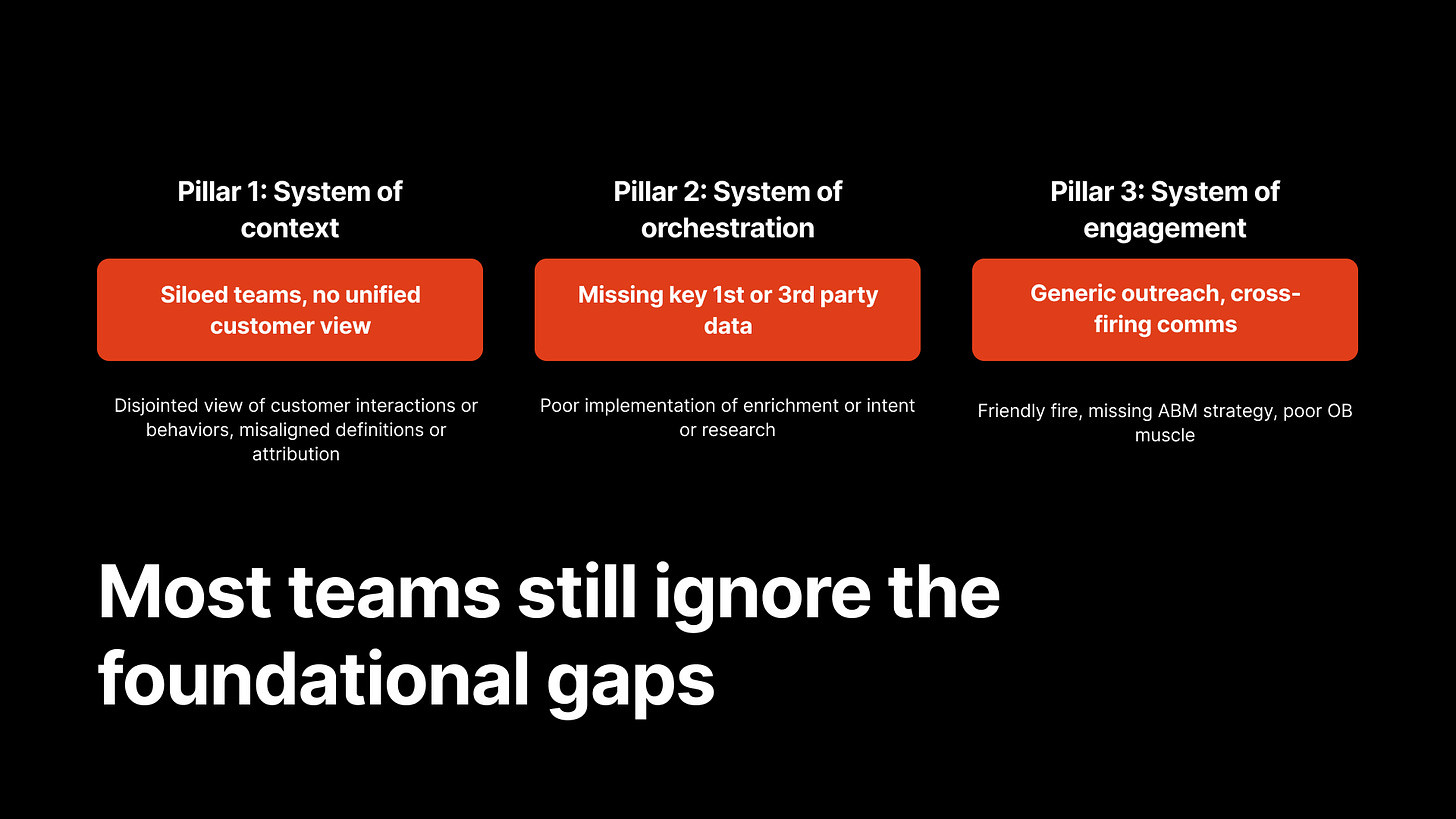

Most teams still ignore the foundational gaps (3 pillars)

Before you can build the future, you have to fix the foundation. And, again, most teams haven’t.

Nico laid out three pillars where he sees consistent gaps:

Pillar 1: System of Orchestration

The problem: Missing key 1st- or 3rd-party data. Poor implementation of enrichment, intent, or research.

If your data foundation is broken, everything downstream breaks too. You can’t orchestrate what you can’t see.

Pillar 2: System of Context

The problem: Siloed teams with no unified customer view. Disjointed understanding of customer interactions. Misaligned definitions. Broken attribution.

Marketing thinks an account is cold. Sales thinks it’s hot. Product thinks it churned. Nobody’s looking at the same picture.

Pillar 3: System of Engagement

The problem: Generic outreach. Crossfiring communications. Missing ABM strategy. Weak outbound muscle.

This is where “friendly fire” happens. Three people from your company email the same prospect in one week with different asks. The prospect gets annoyed. The deal dies.

Nico’s point: these aren’t advanced problems. They’re foundational. And most teams skip past them to chase shiny tools.

Fix the foundation first. Then you earn the right to build what comes next.

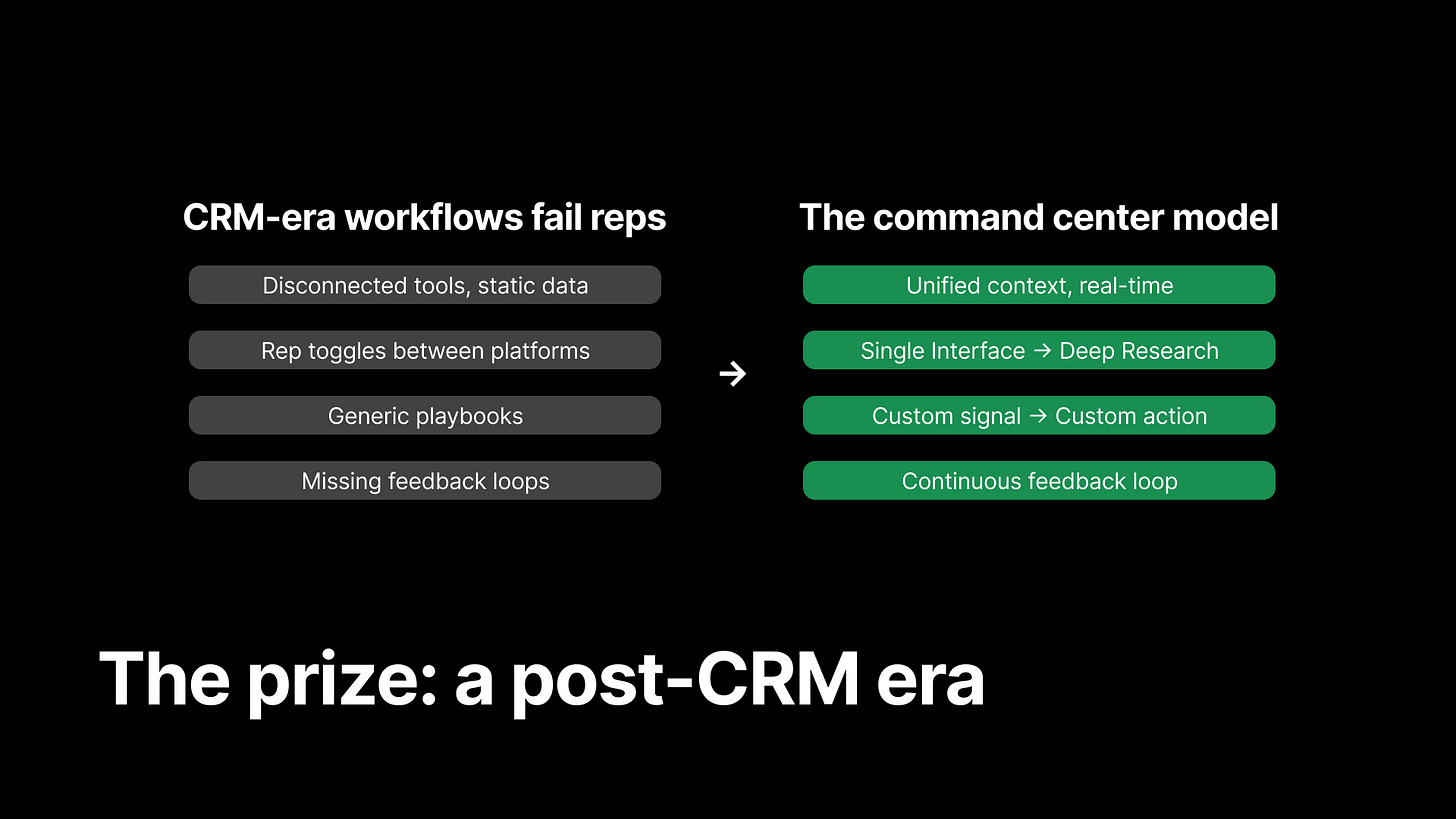

The prize: a post-CRM era (before/after comparison)

So what does “good” look like?

Nico painted a picture of two worlds:

CRM-era workflows (the old way):

Disconnected tools, static data

Rep toggles between platforms

Generic playbooks

Missing feedback loops

The command center model (the new way):

Unified context, real-time

Rep works in one place

Custom signal → action

Continuous feedback loop

In the old model, the rep is the integration layer, manually stitching together context from Salesforce, LinkedIn, Gong, Slack, the product, and their own memory. That’s exhausting. And it doesn’t scale.

In the new model, the system does the stitching. The rep shows up to a “command center” that already knows everything—and surfaces the right action at the right time.

Nico believes this is where the most productive teams are heading. Some are already experimenting with it. The productivity gains are real. (And I couldn’t agree more!)

So how do you actually build this?

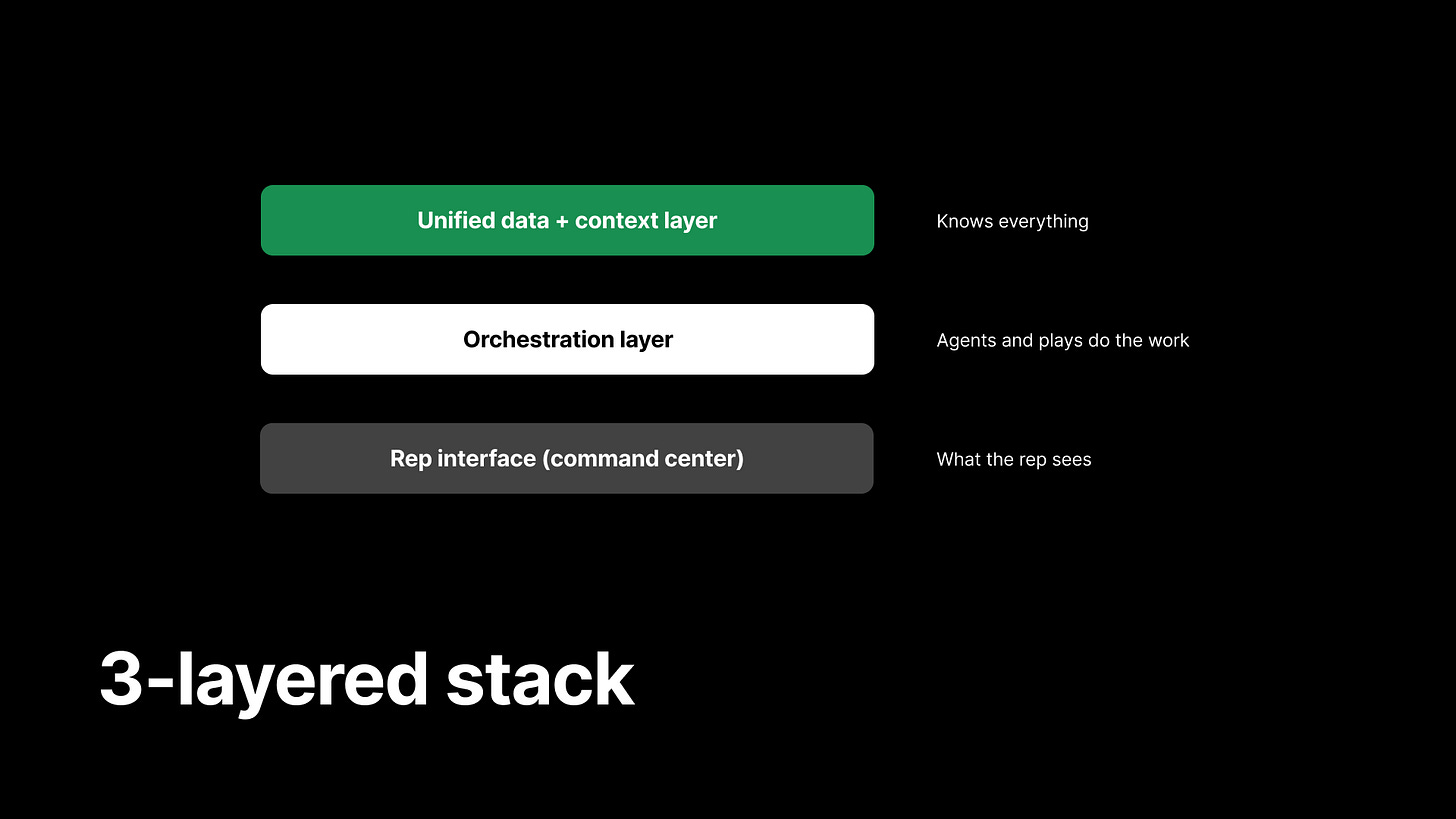

3-layered stack (unified data → orchestration → rep interface)

To build the command center, you need the right architecture. Nico broke it into three layers:

Layer 1: Unified Data + Context Layer

This is the brain. It knows everything—CRM data, product data, marketing data, enrichment, signals, conversation history. All stitched together at the account and contact level.

Layer 2: Orchestration Layer

This is where agents and plays live. The logic that decides: what action should happen next? Who should take it? When?

Layer 3: Rep Interface (Command Center)

This is what the rep actually sees. A single surface that pulls from the context layer and the orchestration layer to show: here’s your priority account, here’s why, here’s what to do.

The key insight: most teams try to build Layer 3 first. They buy a shiny new tool and hope it solves everything. But without Layers 1 and 2, the command center has nothing to show.

Start with the data. Then build the orchestration. Then build the interface.
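The three layers can be sketched as three small components, built in the order Nico recommends: context, then orchestration, then the rep view. This is a toy model under stated assumptions: the class names, the signals (`pricing_visits_7d`, `new_signups_7d`, `open_opportunity`), and the thresholds are hypothetical, and the context layer is an in-memory dict standing in for a warehouse.

```python
from dataclasses import dataclass
from typing import Optional


class ContextLayer:
    """Layer 1: unified context per account (an in-memory stand-in for a warehouse model)."""

    def __init__(self, accounts: dict[str, dict]):
        self.accounts = accounts

    def get(self, domain: str) -> dict:
        return self.accounts.get(domain, {})


@dataclass
class Play:
    name: str
    owner: str   # "rep" or "agent"
    reason: str  # the "why" shown to whoever executes it


class OrchestrationLayer:
    """Layer 2: rules that turn context into the next play (hypothetical signals and thresholds)."""

    def __init__(self, context: ContextLayer):
        self.context = context

    def next_play(self, domain: str) -> Optional[Play]:
        ctx = self.context.get(domain)
        if ctx.get("pricing_visits_7d", 0) >= 3 and ctx.get("open_opportunity"):
            return Play("call the champion about pricing", "rep", "3+ pricing page visits this week on an open opp")
        if ctx.get("new_signups_7d", 0) >= 5:
            return Play("send team onboarding sequence", "agent", "5+ new signups from this domain this week")
        return None


def command_center(domains: list[str], orchestration: OrchestrationLayer) -> list[str]:
    """Layer 3: the rep's single view, highest-ICP accounts first, only plays a human owns."""
    ranked = sorted(domains, key=lambda d: orchestration.context.get(d).get("icp_score", 0), reverse=True)
    rows = []
    for domain in ranked:
        play = orchestration.next_play(domain)
        if play is not None and play.owner == "rep":
            rows.append(f"{domain}: {play.name} (why: {play.reason})")
    return rows


context = ContextLayer({
    "acme.com": {"icp_score": 90, "pricing_visits_7d": 4, "open_opportunity": True},
    "globex.io": {"icp_score": 70, "new_signups_7d": 8},
    "initech.com": {"icp_score": 95, "pricing_visits_7d": 5, "open_opportunity": True},
})
for row in command_center(list(context.accounts), OrchestrationLayer(context)):
    print(row)
```

Note that `command_center` contains no business logic of its own: swap in an empty `ContextLayer` and it shows nothing, which is the "without Layers 1 and 2, the command center has nothing to show" point in code form.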

The architecture is clear. But who actually builds it?

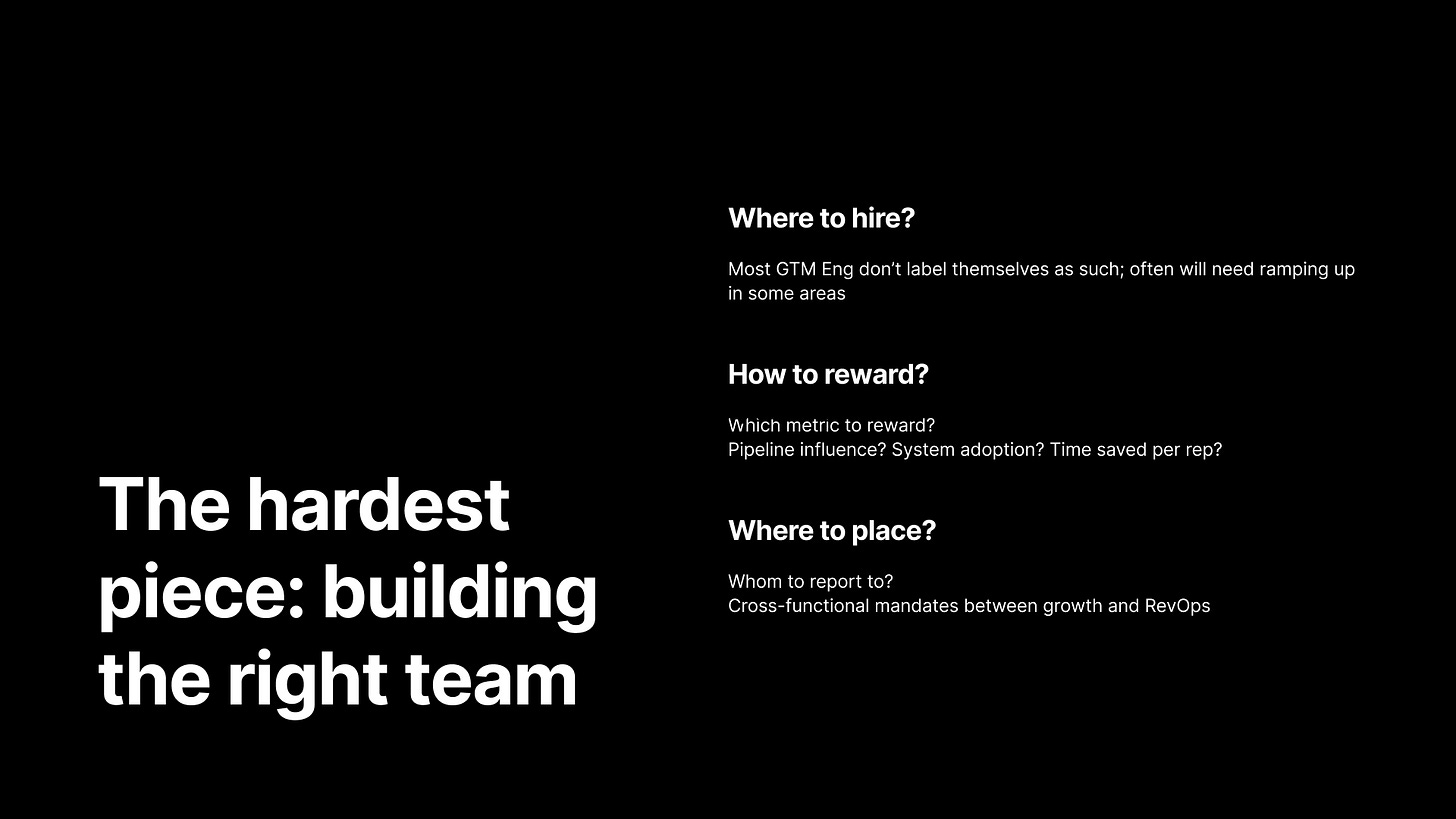

Building the right team (where to hire, reward, place)

The biggest bottleneck Nico sees is staffing this modern GTM engine.

GTM engineering is a new function. There are maybe a few hundred people on LinkedIn with that job title. The talent pool is tiny.

So where do you find people?

First option: Adjacent roles

Look for people in GTM systems, GTM technology, revenue operations, or growth operations. They already understand the motion and the metrics. The ramp-up is manageable.

Second option: Data engineering and analytics

Especially if they’ve been exposed to GTM projects, such as building dashboards for marketing or sales leaders. They have the data skills and understand the stakeholders. It’s a small jump to GTM engineering.

Third option: Solution engineers

They’re technical. They understand buyer problems. They know how to translate between business and systems.

Fourth option: Young SDRs, CSMs, or AEs

Specifically: people from quantitative fields with systems-thinking minds. They have context on the sales motion. They might need more ramp-up on the technical side, but when it works, it works well.

How to reward them:

Nico likes an 80/20 split (base/variable). The variable component is tricky: pipeline created is one option, but GTM engineers work across the funnel (retention, AE productivity, expansion), so the right metric varies.

His recommendation: track number of experiments launched and number of successful experiments. Velocity of experimentation is a leading indicator of quality.
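The two metrics Nico recommends are easy to compute from a simple experiment log. A minimal sketch, assuming a hypothetical `Experiment` record with a launch date and a pass/fail flag judged against a success criterion agreed before launch; the quarterly window and experiment names are illustrative only.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Experiment:
    name: str
    launched: date
    successful: bool  # did it meet the success criterion agreed before launch?


def experiment_metrics(experiments: list[Experiment], start: date, end: date) -> dict:
    """Count launches and wins in a review period (e.g. a quarter), plus the win rate."""
    in_period = [e for e in experiments if start <= e.launched <= end]
    launched = len(in_period)
    successful = sum(e.successful for e in in_period)
    return {
        "launched": launched,
        "successful": successful,
        "success_rate": successful / launched if launched else 0.0,
    }


log = [
    Experiment("pql-routing-v2", date(2024, 1, 10), True),
    Experiment("ads-retargeting-test", date(2024, 2, 5), False),
    Experiment("expansion-signal-alert", date(2024, 3, 20), True),
    Experiment("churn-risk-agent", date(2024, 4, 2), True),
]
print(experiment_metrics(log, date(2024, 1, 1), date(2024, 3, 31)))
# {'launched': 3, 'successful': 2, 'success_rate': 0.6666666666666666}
```

Tracking launches alongside wins keeps the incentive on velocity without rewarding a flood of throwaway tests.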

Where to place them:

Reporting structure matters less than squad composition. Nico’s seen GTM engineers report to Head of Growth, Head of RevOps, even VP of Sales. What matters is the team around them:

Someone who owns the experiment roadmap (Head of Growth, GTM PM, etc.)

A data analyst to build reports and handle data structure

A CRM/systems person for complex integrations

The goal: let the GTM engineer focus on launching experiments and learning, not getting stuck in Salesforce permission hell.

The Bottom Line

Nico’s session was a reminder that the “AI-native GTM” future everyone’s talking about requires real infrastructure.

You can’t bolt agents onto a broken foundation. You need:

Unified data — everything in one place, at the account and contact level

Real-time enrichment — so your systems work with current information, not stale snapshots

Orchestration logic — rules that determine what happens next and who does it

A command center — a single interface where reps can actually work

And you need people who can build this. GTM engineers. A new function for a new era.

Data has always mattered. But in the age of agents, it’s the difference between automation that helps and automation that hurts. The teams that invest in this infrastructure now will compound their advantage. The teams that don’t will wonder why their AI tools aren’t working.

The foundation is the unlock.

Q&A Highlight

One audience question stood out:

“If the context for effective agents is scattered across dozens of tools—Slack, project management, CRMs, Google Drive, Notion, Gong—who builds and maintains the integrations and ingestion pipelines? Is this internal? A mix of vendors?”

Nico’s answer: two approaches.

Option 1: Point solutions (“buy”). Tools like Octave that connect to Gong and other context sources, then expose that context via API or MCP. Self-reinforcing—every new transcript adds potential new ICP signals.

Option 2: Build a knowledge graph in-house (“build”). Store all your context, build a knowledge graph, and have your agents tap into it.

Who owns it? Usually someone on the GTM systems team or a GTM engineer.
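For the "build" option, the core idea fits in a small sketch: store facts from each tool as (subject, predicate, object) triples tagged with their source, then walk outward from an account to assemble context an agent can use. This in-memory `KnowledgeGraph` class and its predicates are hypothetical; a production version would typically sit on a graph database or warehouse tables.

```python
from collections import defaultdict
from typing import Optional


class KnowledgeGraph:
    """Tiny in-memory triple store: (subject, predicate, object) plus the source system."""

    def __init__(self) -> None:
        self._edges: dict[str, list[tuple[str, str, str]]] = defaultdict(list)

    def add(self, subject: str, predicate: str, obj: str, source: str) -> None:
        self._edges[subject].append((predicate, obj, source))

    def facts(self, subject: str, predicate: Optional[str] = None) -> list[tuple[str, str, str]]:
        return [(p, o, s) for p, o, s in self._edges.get(subject, []) if predicate in (None, p)]

    def context_for(self, subject: str, hops: int = 2) -> list[str]:
        """Breadth-first walk from `subject`; returns fact lines an agent prompt could include."""
        lines, seen, frontier = [], {subject}, [subject]
        for _ in range(hops):
            next_frontier = []
            for node in frontier:
                for p, o, s in self.facts(node):
                    lines.append(f"{node} {p} {o} [source: {s}]")
                    if o not in seen:
                        seen.add(o)
                        next_frontier.append(o)
            frontier = next_frontier
        return lines


kg = KnowledgeGraph()
kg.add("acme.com", "has_contact", "jane@acme.com", "crm")
kg.add("acme.com", "plan", "team", "product")
kg.add("jane@acme.com", "mentioned_pain", "slow release cycles", "gong")
print("\n".join(kg.context_for("acme.com")))
```

Keeping the source on every fact lets an agent (or a reviewer) see whether a claim came from the CRM, the product, or a call transcript.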

Watch the full session on YouTube (~20 minutes):

I’m incredibly grateful to Nico for sharing his hard-earned knowledge on building modern GTM systems so openly with us.

Follow Nico Druelle on LinkedIn.

→ Nico runs The Revenue Architects, helping companies like Linear, Descript, Canva, Kong, Scriptwork, Resolve AI, and Attention build modern GTM systems designed for AI.

If you’re looking for help building your data stack, orchestration layer, or GTM engineering function, shoot him a DM on LinkedIn (especially if you’re a PLG company). He’s one of the best in the game.

ICYMI: Session #1 summary: Sorting the World into your CRM (GTM gold is stuck in your top seller’s brain: How to extract + operationalize it).

Session #3 summary coming next: Getting agents deployed into your GTM systems: pitfalls, opportunities, and best practices.

Thank you for your attention and trust,

Brendan 🫡
