Sorting the World into your CRM (GTM gold is stuck in your top seller’s brain: How to extract + operationalize it)

AI x GTM Summit • Session #1 with Andreas Wernicke and Matthew Silberman


Thanks to the companies who made the AI x GTM Summit possible! (+attendee stats)

Sorting the World into Your CRM

GTM Gold Is Stuck in Your Top Seller’s Brain: How to Extract and Operationalize It

Your best rep knows something the rest of the team doesn’t.

They can look at an account and feel whether it’s a fit. They can scan a LinkedIn profile and know if someone’s worth the call. They’ve spent years absorbing patterns, building intuition, and developing taste. And all of that knowledge lives in their head.

This tacit knowledge is the stuff you know but can’t easily explain. Ask a top performer why they chose to prioritize one account over another, and they’ll often struggle to articulate it. It’s years of pattern recognition compressed into instinct.

Tacit knowledge is incredibly valuable. But it’s also fragile.

When your top performer gets promoted, that knowledge leaves the sales floor with them. When they leave for a competitor, they take the playbook too.

This is the central problem of go-to-market right now. We’ve spent decades building systems to capture activity data. Calls logged. Emails sent. Opportunities created. But the actual intelligence that makes top performers perform? That’s never been systematized.

Until now.

At the AI x GTM Summit, Matthew Silberman (founder of Terra Firma) and Andreas Wernicke (founder of Snowball Consult) laid out a practical roadmap for extracting the tacit knowledge trapped in your best sellers’ brains and turning it into scalable, repeatable GTM infrastructure.

Here’s what they covered:

AI: The age of intuition

Now you ICP it, now you don’t

Solve all your problems (in just four easy steps!)

Step 5/∞: ABC, Always be…calibrating

Unstructured world → Structured

AI agent misconceptions

AI agent building in 2026

A real example: qualifying LinkedIn engagers

22-minute video of the full session (YouTube)

Follow Matthew and Andreas on LinkedIn!

Alright, let’s get into it.

AI: The Age of Intuition

Matthew started by naming what everyone’s thinking: no one wants to contribute to the AI slop machine.

It’s already bad enough. And some predictions for 2026 suggest email and other channels are going to be totally destroyed by low-quality AI-generated content. We might all cross a threshold as humanity and start to believe that any online interaction is AI-mediated in some way.

But the most valuable resource will remain human. Taste, human touch, creativity, intuition. You know it when you see it. And your team probably already has it.

The formula Matthew laid out: humans invest, learn, and intuit. AI structures and scales.

Now You ICP It, Now You Don’t

Most companies define their ICP once. Maybe twice. They use the same tired firmographics: industry, headcount, revenue range. And then they wonder why their outbound isn’t converting.

Or worse, when you ask them who their ICP is, they say: “We sell to whoever buys.”

But your top sellers don’t think in firmographics. They think in patterns. When Matthew interviews GTM teams, he digs past the surface-level definitions. He asks about specific closed-won deals. Play by play. What happened in that first discovery call? Why did the prospect take the meeting? What had they experienced the week before that made the timing perfect?

These are the details that actually matter. And they’re almost never captured in your CRM.

Your market changes fast. But most GTM teams don’t change their ICP definitions at that same pace. That’s the gap.

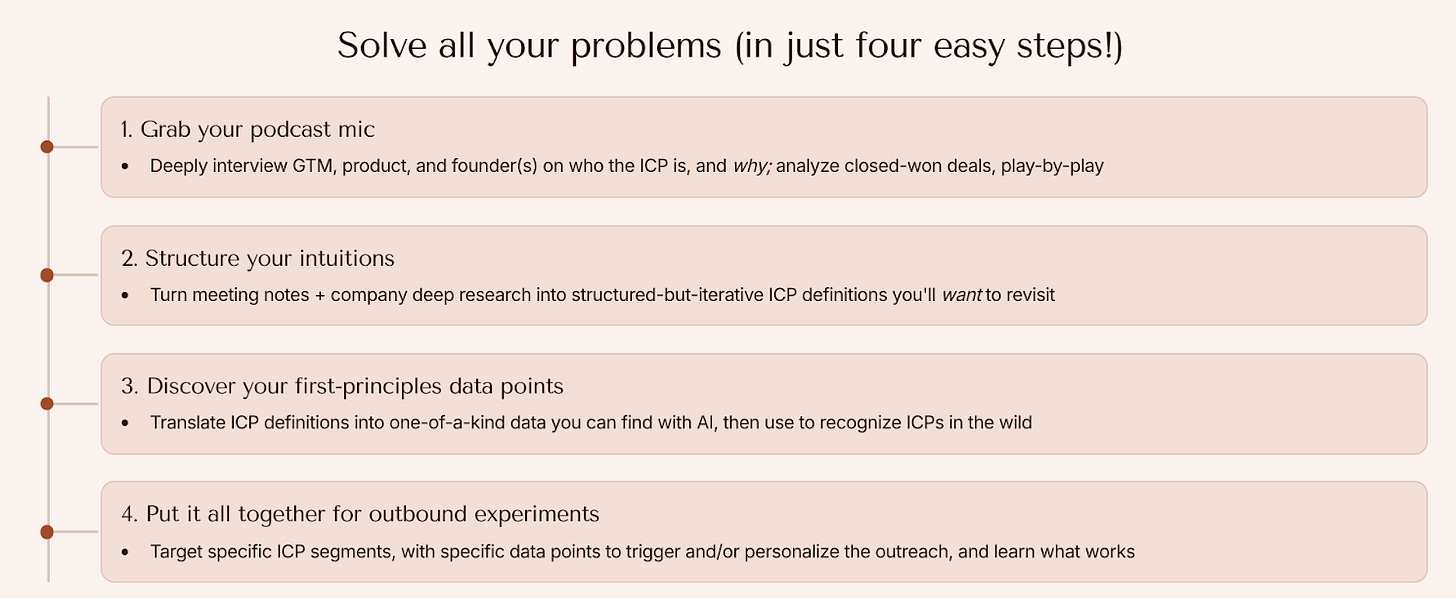

Solve All Your Problems (In Just Four Easy Steps!)

Matthew’s framework is deceptively simple. Four steps. But don’t let that fool you. The real work is in the depth, not the complexity. “Simple, but not easy.”

Step 1: Grab your podcast mic.

This is where Matthew’s journalism background shows up. He treats internal interviews like investigative reporting. Deep conversations with GTM leaders, product teams, and founders. Analyzing closed-won deals with the rigor of a post-mortem. Recording everything.

The goal isn’t to ask “Who is our ICP?” The goal is to ask “Why did this specific deal close?” and then keep asking follow-up questions until you hit something real.

Step 2: Structure your intuitions.

Raw meeting notes are useless if they sit in a folder. Matthew uses a set of AI prompts (built inside Notion) to transform interview transcripts into structured ICP definitions. These aren’t your typical “Series B SaaS companies with 50-200 employees” descriptions. They go much deeper.

The output is something you’ll actually want to revisit. Something you can refine over time as you learn more.

Step 3: Discover your first-principles data points.

This is where it clicks. Once you have structured ICP definitions, you run another prompt to extract the niche data points behind those definitions.

Think about it this way: if you met a perfect-fit account in the wild, how would you know? What signals would tell you they’re qualified? These are the data points you need to enrich. And they’re almost never the standard fields you’d find in a data provider.

Step 4: Put it all together for outbound experiments.

The final prompt takes your personas and data points and generates a list of plays to run. Specific combinations of who to target, what signals to use, and how to craft the message.

Then you test. You see what books meetings. Or doesn’t. And you feed those outcomes back into the system.
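To make the "test and feed back" part of Step 4 concrete, here is a minimal Python sketch of what tracking plays could look like. It is not Matthew’s actual tooling (his prompts live in Notion). The `Play` class, its fields, and the sample personas and signals are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Play:
    """One outbound experiment: who to target, which signal, which message."""
    persona: str        # hypothetical field names, not from the session
    signal: str
    message_angle: str
    sent: int = 0
    meetings: int = 0

    def record(self, sent: int, meetings: int) -> None:
        """Add the results of one batch of outreach to the running totals."""
        self.sent += sent
        self.meetings += meetings

    @property
    def meeting_rate(self) -> float:
        """Meetings booked per message sent (0.0 before anything is sent)."""
        return self.meetings / self.sent if self.sent else 0.0


def rank_plays(plays: list[Play]) -> list[Play]:
    """Order plays from highest to lowest meeting rate."""
    return sorted(plays, key=lambda p: p.meeting_rate, reverse=True)


# Made-up example data, only to show the feedback step.
plays = [
    Play("CRO", "hiring 5+ sales roles", "ramp time"),
    Play("RevOps lead", "new CRM rollout", "data quality"),
]
plays[0].record(sent=40, meetings=2)
plays[1].record(sent=40, meetings=5)
best = rank_plays(plays)[0]
```

The ranking is deliberately naive. With small batches a real review would also look at sample size and reply quality before declaring a winner.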

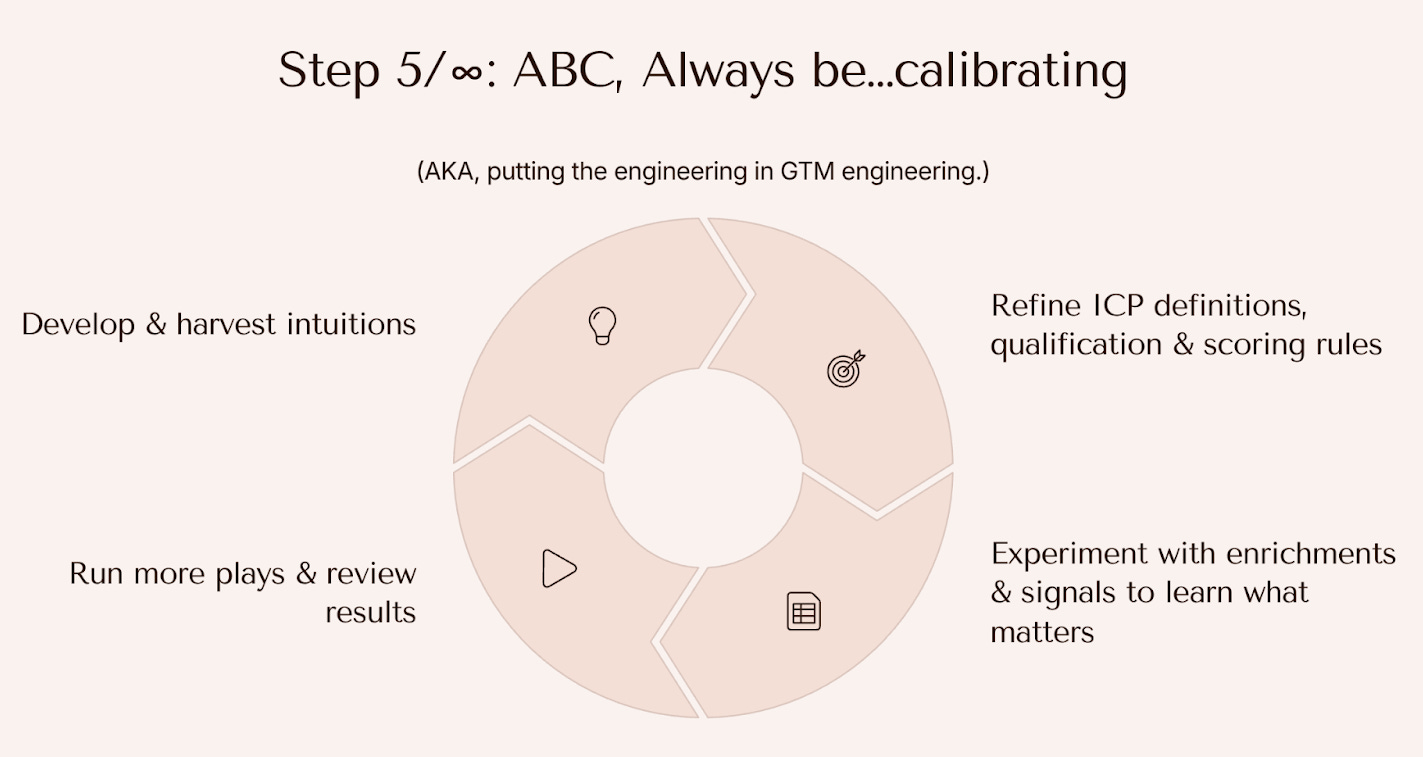

Step 5/∞: ABC, Always Be…Calibrating

Matthew made a point that stuck with me: this is why it’s called GTM engineering.

It’s not a one-time project. It’s not a linear process where you complete step four and you’re done. It’s circular. Iterative. Ongoing.

You build intuitions. You refine your ICP definitions. You experiment with enrichments to learn what actually matters. You run plays and review results. Then you go back to the beginning and harvest more intuitions based on what you learned.

GTM is a living, breathing thing. Always evolving. Just like a product. Or your CRM. It’s never “done.”

The diagram in Matthew’s slides shows this as a continuous loop. Four quadrants, always spinning:

Build and harvest intuitions

Refine definitions and scoring rules

Experiment with enrichments and signals

Run plays and review results

If you’re treating ICP work as a quarterly exercise, you’re doing it wrong.

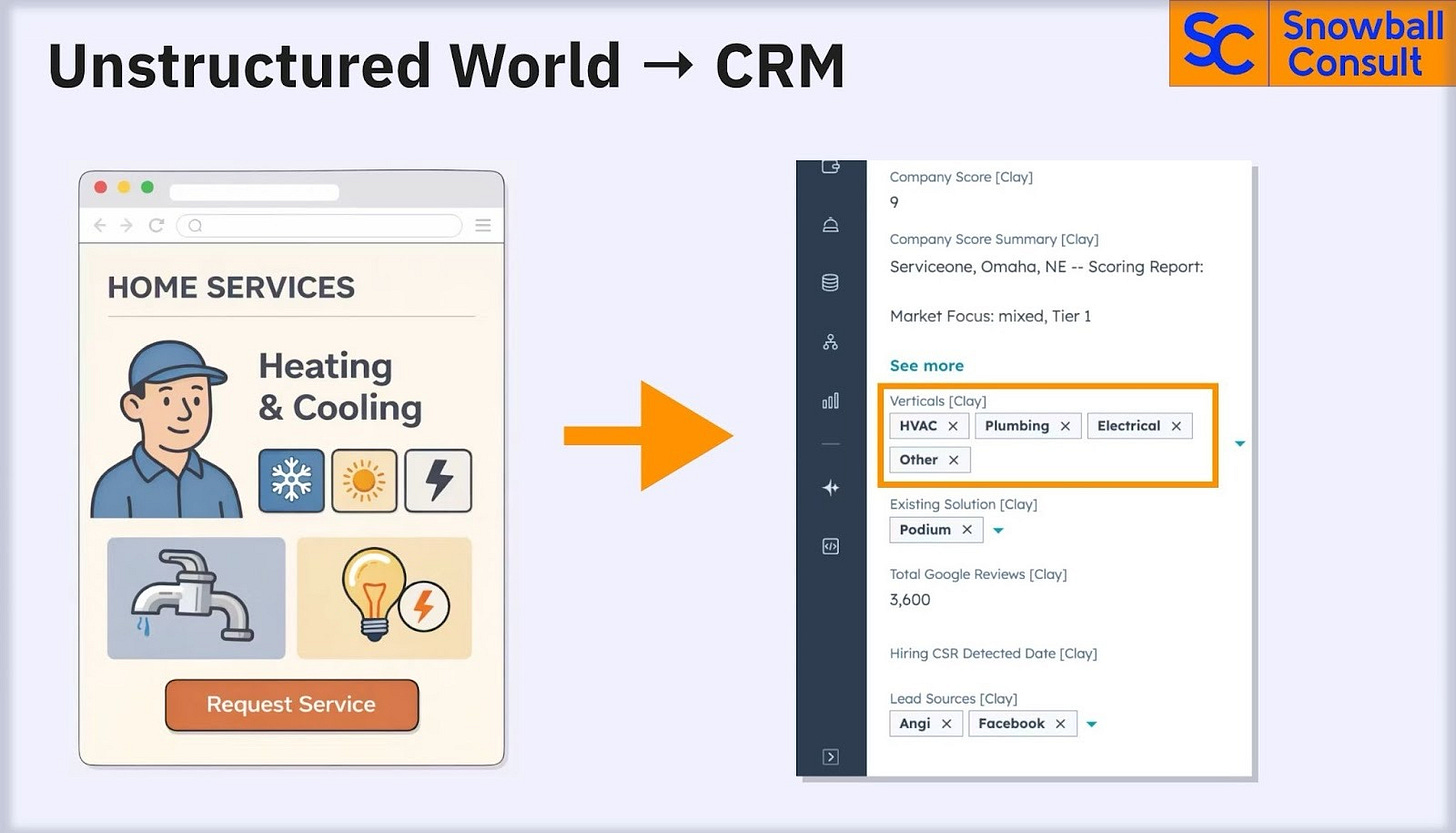

Unstructured World → Structured

After Matthew laid out the strategy, Andreas took the baton to walk through the execution.

His opening slide summarized the talk: “How to Sort the Messy World.”

Because that’s what AI agents actually do. They take unstructured information from websites, LinkedIn profiles, job postings, and public data, and they turn it into structured fields in your CRM. The messy world, sorted.

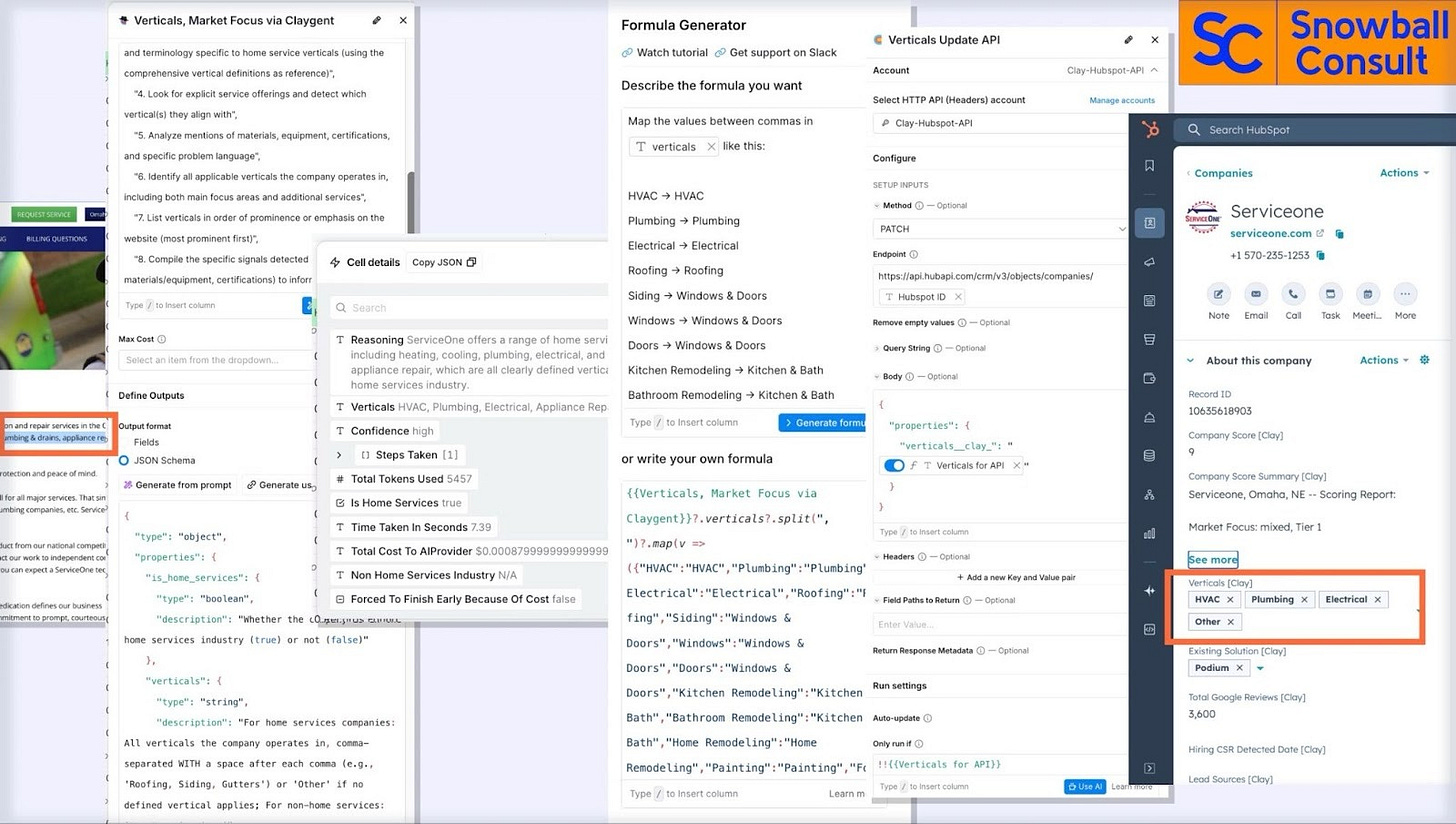

Andreas showed a simple example: a home services website with icons for heating, cooling, plumbing, and electrical. Unstructured. Messy. But when you run it through an agent, you get a HubSpot record with clean picklist values: HVAC, Plumbing, Electrical, Other. Plus a company score, a market focus classification, existing solutions detected, Google review count, hiring signals, and lead sources.

Here are the (not so simple) steps to make this happen with an agent:

That transformation is the whole game. Take what you see on a website and turn it into fields you can filter, sort, and act on.
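As a rough illustration of that transformation, the sketch below maps free-form website text onto the picklist values from Andreas’s example (HVAC, Plumbing, Electrical, Other). The keyword lookup is only a stand-in for the AI agent, which would do this with far more nuance. `KEYWORDS`, `CompanyRecord`, `classify_services`, and `to_record` are hypothetical names, not part of HubSpot or any tool shown in the session.

```python
from dataclasses import dataclass, field

# Picklist values from the HubSpot example in the session.
SERVICE_PICKLIST = ("HVAC", "Plumbing", "Electrical", "Other")

# Hypothetical keyword map standing in for the agent's judgment.
KEYWORDS = {
    "HVAC": ("heating", "cooling", "furnace", "air conditioning"),
    "Plumbing": ("plumbing", "drain", "water heater"),
    "Electrical": ("electrical", "wiring", "panel upgrade"),
}


@dataclass
class CompanyRecord:
    """A tiny stand-in for a CRM company record."""
    domain: str
    services: list[str] = field(default_factory=list)


def classify_services(page_text: str) -> list[str]:
    """Return every picklist value whose keywords appear in the page text."""
    text = page_text.lower()
    found = [
        label
        for label, words in KEYWORDS.items()
        if any(word in text for word in words)
    ]
    return found or ["Other"]


def to_record(domain: str, page_text: str) -> CompanyRecord:
    """Turn messy page text into a record with clean picklist values only."""
    services = classify_services(page_text)
    # Guard: nothing outside the picklist should ever reach the CRM.
    assert all(s in SERVICE_PICKLIST for s in services)
    return CompanyRecord(domain=domain, services=services)


record = to_record("example.com", "Heating, Cooling, Plumbing and Electrical services")
# record.services is ["HVAC", "Plumbing", "Electrical"]
```

The point of the sketch is the output contract, not the matching logic: whatever does the classifying, only values from a fixed picklist end up in the CRM, which is what makes the field filterable.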

AI Agent Misconceptions

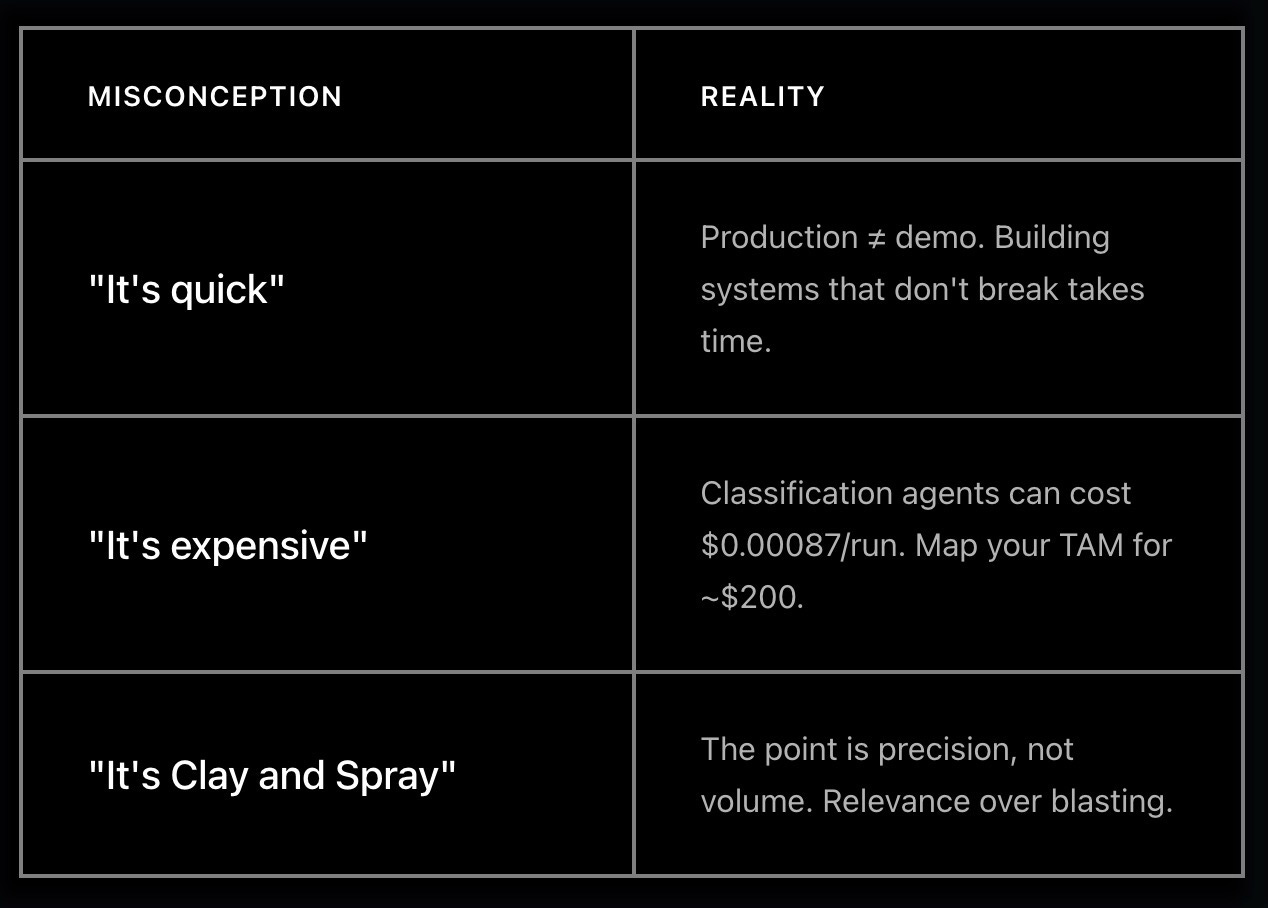

Before diving into the how, Andreas addressed three misconceptions he sees constantly.

That cost number is worth pausing on. Andreas showed a screenshot: Total Cost to AI Provider: $0.00087. Fractions of a penny per classification. When agents are built well, you can enrich your entire TAM for a couple of hundred dollars. Often, this means using your company’s LLM API instead of Clay credits (or otherwise).

The cost only runs wild when you build fast without thinking about optimization.
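A quick back-of-the-envelope check on that number, as a Python sketch. The per-classification cost comes from the screenshot. The TAM size and the number of fields per company are assumptions chosen only to show the arithmetic.

```python
# USD per classification, from the screenshot shown in the session.
COST_PER_CLASSIFICATION = 0.00087


def enrichment_cost(companies: int, fields_per_company: int = 1) -> float:
    """Total provider cost if every field on every company is one classification."""
    return companies * fields_per_company * COST_PER_CLASSIFICATION


def classifications_for_budget(budget_usd: float) -> int:
    """How many classifications a given budget buys at this unit cost."""
    return int(budget_usd / COST_PER_CLASSIFICATION)


# Assumed for illustration: a 50,000-company TAM with 5 AI-classified fields each.
total = enrichment_cost(companies=50_000, fields_per_company=5)
print(f"${total:,.2f}")                   # about $217.50
print(classifications_for_budget(200))    # roughly 230,000 classifications
```

At this unit cost, "a couple of hundred dollars" covers a few hundred thousand classifications. Real runs will vary with prompt length, model choice, and retries, so treat this as an order-of-magnitude estimate.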

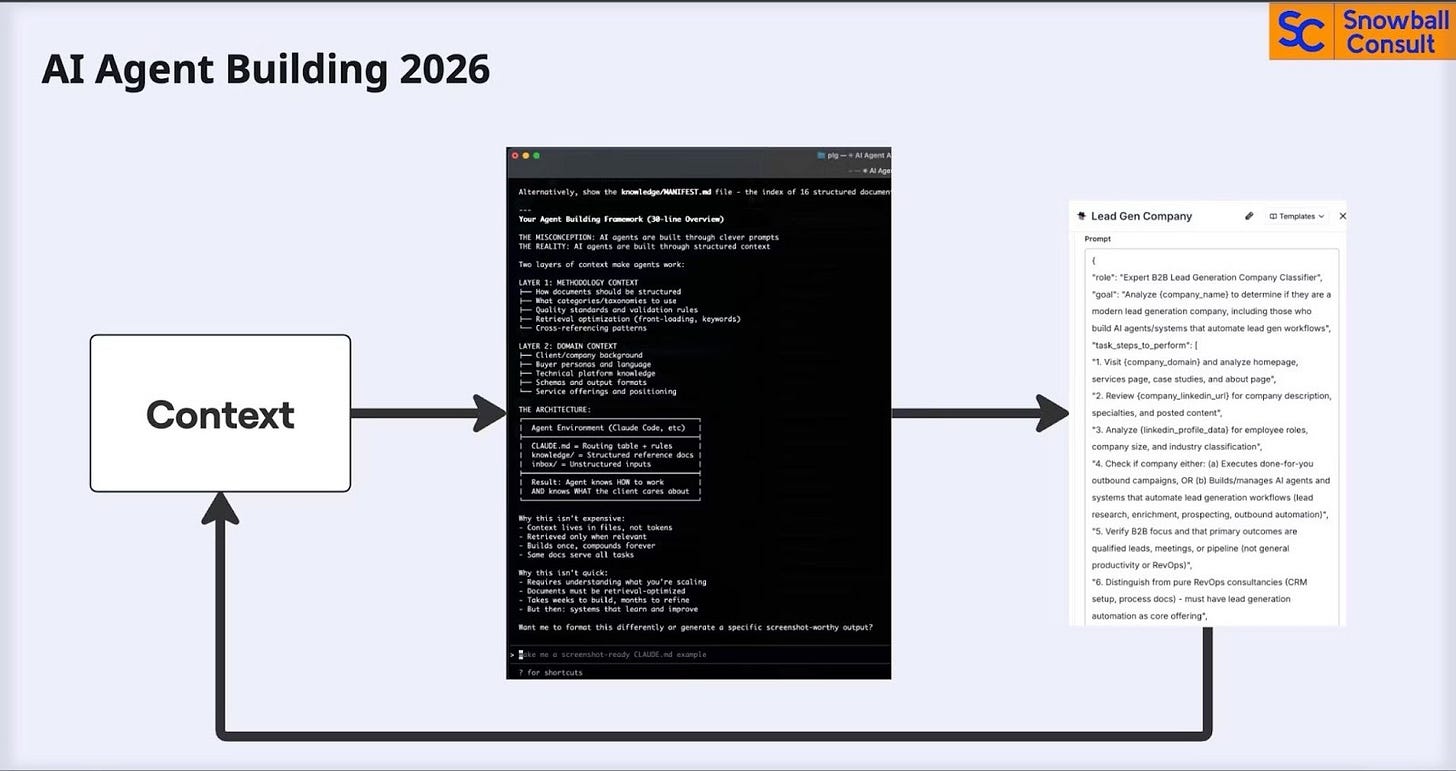

AI Agent Building in 2026

Andreas shared a comparison that captured the shift happening right now.

The old process (2024):

Website → Agent Building → Agent Outputs → Data Transformation → Integration → CRM

The new process (2026):

Context → Context → More Context → Agent → Output

Context is everything. And by context, Andreas means:

Company context: What does the client care about?

Agent context: How should the prompt be structured?

Foundational documents: Deep definitions of concepts that matter to the business

Andreas doesn’t write prompts from scratch anymore. He builds foundational documents first. Deep definitions of the concepts he’s trying to classify. Then he uses those documents as context when building agents in Claude Code, asking it to generate structured prompts following his framework.

The result: agents that classify with high confidence, explain their reasoning, and cost fractions of a penny per company.
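Here is one way the "context first" idea could look in code: a small Python sketch that assembles company context, agent instructions, and foundational documents into a single prompt. This is an assumption about the general shape, not Andreas’s actual framework. The `AgentContext` class, the section headings, and the requested JSON keys are hypothetical, and no model is called.

```python
from dataclasses import dataclass


@dataclass
class AgentContext:
    """The three kinds of context named in the session."""
    company_context: str               # what the client cares about
    agent_context: str                 # how the agent should behave and respond
    foundational_docs: dict[str, str]  # title -> deep definition of a concept


def build_prompt(ctx: AgentContext, company_description: str) -> str:
    """Assemble one prompt: context first, the item to classify last."""
    docs = "\n\n".join(
        f"## {title}\n{body}" for title, body in ctx.foundational_docs.items()
    )
    return (
        f"# Company context\n{ctx.company_context}\n\n"
        f"# Foundational documents\n{docs}\n\n"
        f"# Instructions\n{ctx.agent_context}\n\n"
        f"# Company to classify\n{company_description}\n\n"
        'Respond as JSON with keys "label", "confidence", and "reasoning".'
    )


ctx = AgentContext(
    company_context="We sell GTM engineering services to B2B sales teams.",
    agent_context="Classify the company using only the definitions provided.",
    foundational_docs={
        "Lead generation company": "(the full written definition goes here)",
    },
)
prompt = build_prompt(ctx, "Acme books outbound meetings for SaaS startups.")
```

Asking for a confidence value and reasoning alongside the label mirrors the outcome described above: classifications you can audit rather than take on faith.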

A Real Example: Qualifying LinkedIn Engagers

Andreas walked through a real workflow he built.

The scenario: Brendan (hey, that’s me! 👋) posts something on LinkedIn. 50 people engage.

The lazy approach (don’t do this):

DM all of them with AI-generated fake personalization. “Hey, noticed you liked Brendan’s post. I really love your thinking about remote work in the Caribbean. By the way, want to buy GTM engineering services?”

This contributes to the AI slop machine. It doesn’t work.

The better approach (precision targeting):

Run them through qualification gates:

Pull LinkedIn profiles

Check: Are they in a leadership role? (Andreas works best with CROs)

Look up the company

Check: Is the company the right size?

Check: Is it a lead gen company? (If yes, filter out. They’re probably competitors.)

Check: Does the company have 5+ sales roles?

Only then: Identify pain points, analyze LinkedIn posts, determine GTM topics they care about

Only then: Take action

That fifth gate is where the foundational document approach pays off. Instead of asking an LLM “Is this a lead gen agency?” (vague, inconsistent), Andreas built a comprehensive document defining what constitutes a modern lead generation company. That document becomes context for the classifier. The result: high confidence classifications that explain their reasoning.

Result: 50 engagers → 4-5 genuinely qualified prospects worth real outreach.

The Clay workflow Andreas showed had columns for each of these gates. Most rows showed “Run condition not met” because most engagers didn’t pass the filters. That’s the point. You’re not trying to reach out to everyone. You’re trying to reach out to the right people–and only the right people–with something relevant to say.
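The gate logic can be sketched in a few lines of Python. This is not the Clay workflow itself, just the same idea: checks run in order, and a row stops at the first one it fails. The title keywords and headcount range are hypothetical placeholders, and `is_lead_gen` stands in for the output of the foundational-document classifier.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Engager:
    name: str
    title: str
    company_headcount: int
    is_lead_gen: bool   # would come from the lead gen classifier
    sales_roles: int


# Hypothetical thresholds; tune these to your own ICP.
LEADERSHIP_WORDS = {"cro", "ceo", "vp", "chief", "head", "founder"}
HEADCOUNT_RANGE = (50, 1000)


def is_leader(e: Engager) -> bool:
    """Match whole words in the title to avoid accidental substring hits."""
    words = set(e.title.lower().replace(",", " ").split())
    return bool(words & LEADERSHIP_WORDS)


# Ordered gates: later checks only matter if earlier ones pass.
GATES: list[tuple[str, Callable[[Engager], bool]]] = [
    ("leadership role", is_leader),
    ("company size", lambda e: HEADCOUNT_RANGE[0] <= e.company_headcount <= HEADCOUNT_RANGE[1]),
    ("not a lead gen company", lambda e: not e.is_lead_gen),
    ("5+ sales roles", lambda e: e.sales_roles >= 5),
]


def first_failed_gate(e: Engager) -> Optional[str]:
    """Return the name of the first gate the engager fails, or None if all pass."""
    for name, check in GATES:
        if not check(e):
            return name
    return None


def qualify(engagers: list[Engager]) -> list[Engager]:
    """Keep only engagers who pass every gate."""
    return [e for e in engagers if first_failed_gate(e) is None]
```

Ordering matters for cost as well as clarity: cheap checks like title and headcount run first, so the more expensive research steps only run on the handful of rows that survive.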

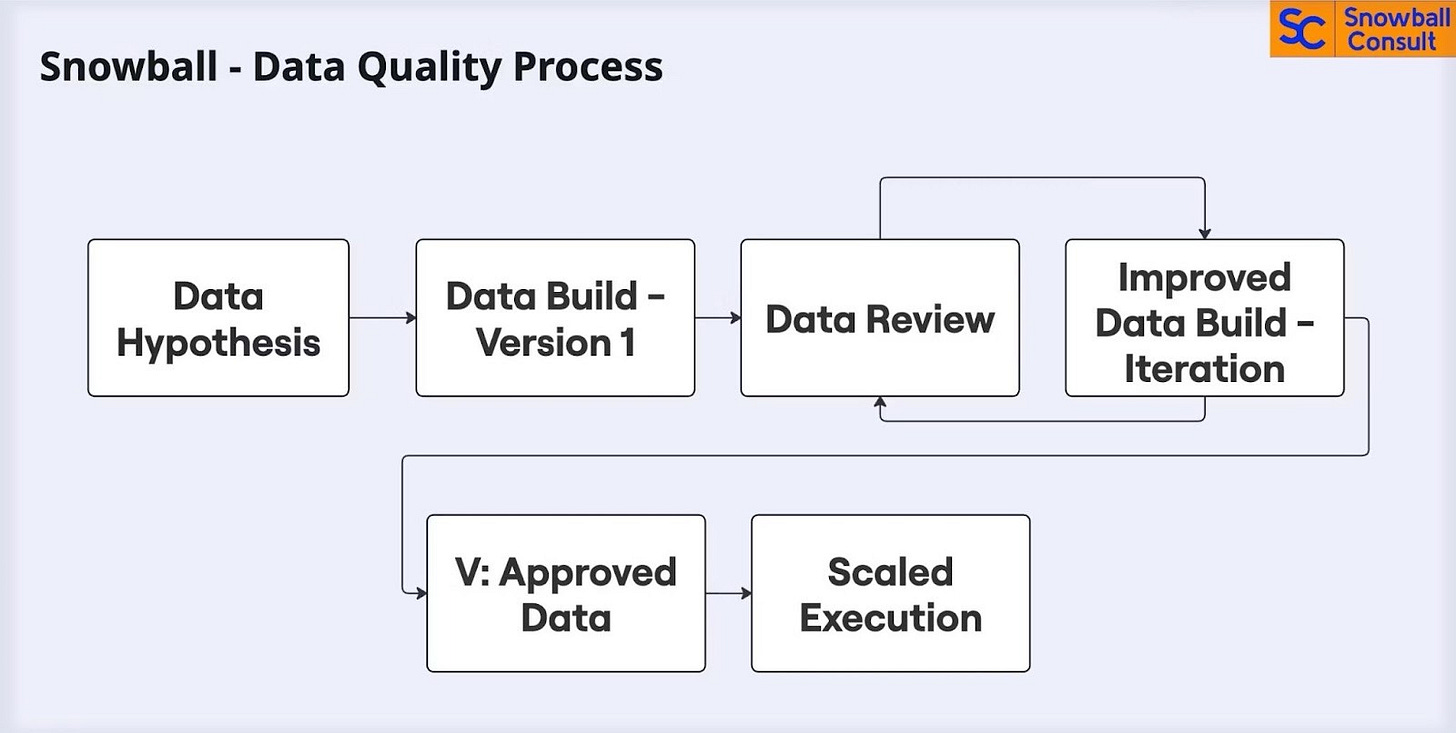

The Data Quality Process

Andreas closed with a framework for ensuring quality at scale.

It’s a loop. (Everything in GTM engineering is a loop.)

This is why these engagements aren’t 3-week projects. Building systems that work in production takes iteration. It takes partnership between the builder and the GTM team. It takes patience.

But once you have it, you have something powerful. A system that can sort the messy world into your CRM. A system that captures the intuition of your best sellers and makes it available to everyone. A system that (finally) gets better over time.

The Bottom Line

My takeaway from Matthew and Andreas:

The scarcest resource in go-to-market right now isn’t data. It’s taste. Human touch. Intuition. The stuff that makes your best rep, your best rep.

AI can’t create that intuition. But it can help you extract it, structure it, and scale it.

The process:

Interview deeply

Structure the intuitions into ICP definitions

Translate those definitions into enrichable data points

Run plays and learn what works

Feed learnings back into the system

Repeat… forever

Build with intention (take foundations seriously). Build for relevance (bespoke to your market). Build with great context (what does good look like). That’s how you get outsized results.

PS

I shared this draft with Andreas to get his feedback, and we ended up having a long conversation about how much has changed in the roughly 45 days since this session happened (from Dec 15th to Jan 30th). If you’ve been keeping up, you’ve felt this too. Claude Code, in particular, has really had a moment.

The big shift, Andreas believes (and I agree), is that building AI agents is quickly becoming commoditized (thanks to Claude Code, Claygents, and many other tools like Rox).

Which leaves the more strategic and valuable question: what should you build?

When you can build anything (not if, but when), having the human discernment to decide what to focus on at a particular GTM org is going to be the most important task in 2026. Taste.

The space is moving faster and faster. I’m excited to watch it unfold, and to ride the wave together.

Watch the entire (22-minute) session on YouTube:

I’m grateful for Matthew and Andreas sharing so freely! It gives me energy to navigate this exciting new GTM playbook with legends like them.

Follow Matthew Silberman and Andreas Wernicke on LinkedIn.

→ Matthew runs Terra Firma, building GTM engines for seed to Series B startups backed by YC, Felicis, CRV, and Greycroft. He’s also Co-Lead of Clay Club New York and a Technical Advisor at TCP.

→ Andreas runs Snowball Consult, shipping custom GTM systems for companies like Hatch, Exporty, Blueprint, and Workspan. And he’s also the Clay Club New York Lead.

They’re the real deal. So, if you’re looking for advising or consulting, I can’t recommend them enough. Please reach out to them to see if they have the capacity to work with your team.

Session #2 recap coming next: Infrastructure for agentic GTM: data stack, orchestration, and activation (PLG-focus).

Thank you for your attention and trust,

Brendan 🫡

Thanks again to the legends, Andreas and Matthew, for running this session.